Podroll

I love podcasts, but finding new ones is tough! I mostly rely on friends' recommendations. To make discovery easier, I'm sharing my personal podroll, which includes a variety of shows I enjoy. This list is frequently updated using a script, so check back often for new additions. You can find my podroll on Player.fm, a platform created by my friend Mike Mahemoff. Read More

I lead the Chrome Developer Relations team at Google.

We want people to have the best experience possible on the web without having to install a native app or produce content in a walled garden.

Our team tries to make it easier for developers to build on the web by supporting every Chrome release, creating great content to support developers on web.dev, contributing to MDN, helping to improve browser compatibility, and building some of the best developer tools, such as Lighthouse, Workbox, and Squoosh, to name just a few.

I love to learn about what you are building, and how I can help with Chrome or Web development in general, so if you want to chat with me directly, please feel free to book a consultation.

Adding "dark mode" to my blog

I added dark mode to my blog! Inspired by Jeremy Keith, I used CSS custom properties and media queries to switch between light and dark themes based on the user's preference. I also included a fallback for browsers that don't support custom properties and a temporary CSS class for testing since Chrome DevTools didn't yet have dark mode emulation. Read More

Using Web Mentions in a static site (Hugo)

This blog post discusses how to integrate Webmentions into a statically generated website built with Hugo, hosted on Zeit. Static sites lack dynamic features like comments, often relying on third-party solutions. This post explores using Webmentions as a decentralized alternative to services like Disqus. It leverages webmention.io as a hub to handle incoming mentions and pingbacks, validating the source and parsing page content. The integration process involves adding link tags to HTML, incorporating the webmention.io API into the build process, and efficiently mapping mention data to individual files for Hugo templates. Finally, a cron job triggers regular site rebuilds via Zeit's deployment API, ensuring timely updates with new mentions. Read More

Creating a pop-out iframe with adoptNode and "magic iframes"

I explored the concept of "magic iframes" and using adoptNode to move iframes between windows. Initially, I thought I'd found a way to preserve iframe state during the move. However, after discussing with Jake Archibald, it turns out that appendChild already handles node adoption, making adoptNode redundant. Furthermore, moving iframes causes them to reload, negating the perceived benefit. While moving DOM elements between documents is still interesting, the original premise for iframes doesn't hold. The post includes a demo and discusses the potential of the <portal> API.

Read More

Meatspace Augmented Reality: From Chester to Nagoya

Some thoughts on AR after finding some during my travels. TL;DR - cheaper content creation and better discovery tools are needed. Read More

Photos from Carlisle Castle

Just got back from a trip to Carlisle Castle with the lads! It's a must-see if you're in the area. Learned a lot about its history in the conflicts between England and Scotland, which got me thinking about the potential impact of Brexit on Scotland's future, especially given Carlisle's proximity. I've included a few photos of the castle to give you a taste of what to expect. Read More

Idle observation: Indexing text in images

During a trip to Llangollen, I noticed that the historical information on local signs wasn't available online. This sparked an idea to make such information accessible on the web, especially for those with reading difficulties. I experimented with my existing image text extraction tool and found it works surprisingly well on these types of images. I'm now considering creating a website dedicated to archiving and indexing the text from informational signs, inspired by Google's Navlekhā project which helps offline Indian publishers digitize their content. Read More

Liverpool World Museum

I recently took my kids to the Liverpool World Museum. While some areas like the Space and Time section and the Bug enclosure were a bit underwhelming, the newly opened Egyptian exhibit was fantastic! Read More

Bookstore - Llangollen

I revisited a bookstore in Llangollen, located above a cafe, that I fondly remember from my childhood visits with my grandparents. It's charmingly unchanged, but I wish they had a larger selection of comics, like they did back then. Check out their website! Read More

Webmention.app — 🔗

I love the idea of Webmentions, yet I've not had the time to implement them on my site. At a high level, Webmentions let you comment on, like, and reply to other content on the web and have that reaction be visible on the content itself, without being centralised in tools like Disqus (which I am keen to remove from my site).

Webmentions are split into two components: the sender and the receiver. The receiver is the site that I am writing a post about, and it might have something on its pages that shows inbound links or reactions to its posts; the sender is, well, me. I need to let the remote site know that I have written about or reacted to some content that it has created.

The rather awesome Remy Sharp created webmention.app to solve one part of the problem: sending pings. Remy's tool makes it easy to send 'pings' to potential receivers that I have linked to, by simply calling a CLI script.

I host my blog on Zeit, built with Hugo and the static-builder tool, so it was relatively trivial for me to add support for webmention.app. I just npm i webmention and then call the CLI version of the tool from my build.sh file - it really is that simple.

Now when I create a post, it should send a quick ping to every new URL that I have linked to and written about.
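
For context, the ping itself is tiny. Here is a minimal sketch of the request a sender makes, assuming the receiver's endpoint has already been discovered: the Webmention spec has the sender POST form-encoded `source` and `target` URLs. The `buildWebmentionRequest` name and the variables in the usage comment are hypothetical, and this is not a description of how webmention.app works internally.

```javascript
// Hypothetical helper: builds the fetch() options for one Webmention ping.
// `source` is my post, `target` is the page that my post links to.
const buildWebmentionRequest = (source, target) => {
  // new URL() throws on anything that is not an absolute URL.
  const body = new URLSearchParams({
    source: new URL(source).href,
    target: new URL(target).href,
  });

  return {
    method: 'POST',
    headers: { 'Content-Type': 'application/x-www-form-urlencoded' },
    body: body.toString(),
  };
};

// Usage (the endpoint is whatever the receiver advertises):
// await fetch(endpoint, buildWebmentionRequest(myPostUrl, linkedUrl));
```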

Creating a commit with multiple files to Github with JS on the web

I've created a simple UI for my static site and podcast creator that allows me to quickly post new content. It uses Firebase Auth, EditorJS, Octokat.js, and Zeit's Github integration. This post focuses on committing multiple files to Github using Octokat.js. The process involves getting a reference to the repo and the tip of the master branch, creating blobs for each file, creating a new tree with these blobs, and creating a commit that points to the new tree. The code handles authentication, creates blobs for images, audio (if applicable), and markdown content, and then creates the tree and commit. This setup allows me to have a serverless static CMS. Read More
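
The blob, tree, and commit sequence described above can be sketched against GitHub's Git Data REST endpoints. This is a minimal sketch rather than the Octokat.js code from the post: `request` is a hypothetical helper that performs an authenticated call and resolves with the parsed JSON, and `repo`, `branch`, and the file list are placeholders.

```javascript
// `request(method, path, body)` is an assumed helper around fetch().
const commitFiles = async (request, repo, branch, message, files) => {
  const base = `/repos/${repo}/git`;

  // 1. Find the commit at the tip of the branch, and its tree.
  const ref = await request('GET', `${base}/ref/heads/${branch}`);
  const parentSha = ref.object.sha;
  const parent = await request('GET', `${base}/commits/${parentSha}`);

  // 2. Upload every file as a blob ('utf-8' for text, 'base64' for binary).
  const tree = [];
  for (const { path, content, encoding = 'utf-8' } of files) {
    const blob = await request('POST', `${base}/blobs`, { content, encoding });
    tree.push({ path, mode: '100644', type: 'blob', sha: blob.sha });
  }

  // 3. Create a tree layered on top of the parent commit's tree.
  const newTree = await request('POST', `${base}/trees`, {
    base_tree: parent.tree.sha,
    tree,
  });

  // 4. Create the commit, then move the branch to point at it.
  const commit = await request('POST', `${base}/commits`, {
    message,
    tree: newTree.sha,
    parents: [parentSha],
  });
  await request('PATCH', `${base}/refs/heads/${branch}`, { sha: commit.sha });

  return commit.sha;
};
```

Because all the files land in one tree, the repository sees a single commit, which means a single rebuild of the site per post.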

Screen Recorder: recording microphone and the desktop audio at the same time — 🔗

I have a goal of building the world's simplest screen recording software, and I've been slowly noodling around on the project for the last couple of months (I mean really slowly).

In previous posts I had got the screen recording and a voice overlay working by futzing about with the streams from all the input sources. One area of frustration, though, was that I could not work out how to get the audio from the desktop and overlay the audio from the microphone on top of it. I finally worked out how to do it.

Firstly, getDisplayMedia in Chrome now allows audio capture. There seems to have been an odd oversight in the spec in that it did not allow you to specify audio: true in the function call; now you can.

const audio = audioToggle.checked || false;

desktopStream = await navigator.mediaDevices.getDisplayMedia({ video:true, audio: audio });

Secondly, I had originally thought that by creating two tracks in the audio stream I would be able to get what I wanted. However, I learnt that Chrome's MediaRecorder API can only output one track, and it wouldn't have worked anyway, because tracks are like the multiple audio tracks on a DVD: only one can play at a time.

The solution is probably simple to a lot of people, but it was new to me: Use Web Audio.

It turns out that the Web Audio API has createMediaStreamSource and createMediaStreamDestination, both of which are needed to solve the problem. createMediaStreamSource can take the streams from my desktop audio and my microphone, and by connecting the two into the node created by createMediaStreamDestination I get a single stream that I can pipe into the MediaRecorder API.

const mergeAudioStreams = (desktopStream, voiceStream) => {

const context = new AudioContext();

// Create a couple of sources

const source1 = context.createMediaStreamSource(desktopStream);

const source2 = context.createMediaStreamSource(voiceStream);

const destination = context.createMediaStreamDestination();

const desktopGain = context.createGain();

const voiceGain = context.createGain();

desktopGain.gain.value = 0.7;

voiceGain.gain.value = 0.7;

source1.connect(desktopGain).connect(destination);

// Connect source2

source2.connect(voiceGain).connect(destination);

return destination.stream.getAudioTracks();

};

Simples.
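
To show where the merged track ends up, here is a minimal sketch of feeding it to a recorder. The `createRecorder` name, the `env` parameter (there so the browser constructors can be swapped out), and passing the merge function in as an argument are assumptions for illustration, not code lifted from the project.

```javascript
// Combine the desktop video track(s) with the single merged audio track
// and hand the resulting stream to MediaRecorder.
const createRecorder = (desktopStream, voiceStream, mergeAudio, env = globalThis) => {
  const tracks = [
    ...desktopStream.getVideoTracks(),
    ...mergeAudio(desktopStream, voiceStream),
  ];

  // One video track plus one audio track: a shape MediaRecorder can output.
  const stream = new env.MediaStream(tracks);
  return new env.MediaRecorder(stream);
};

// In the browser:
// const recorder = createRecorder(desktopStream, voiceStream, mergeAudioStreams);
// recorder.start();
```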

The full code can be found on my glitch, and the demo can be found here: https://screen-record-voice.glitch.me/

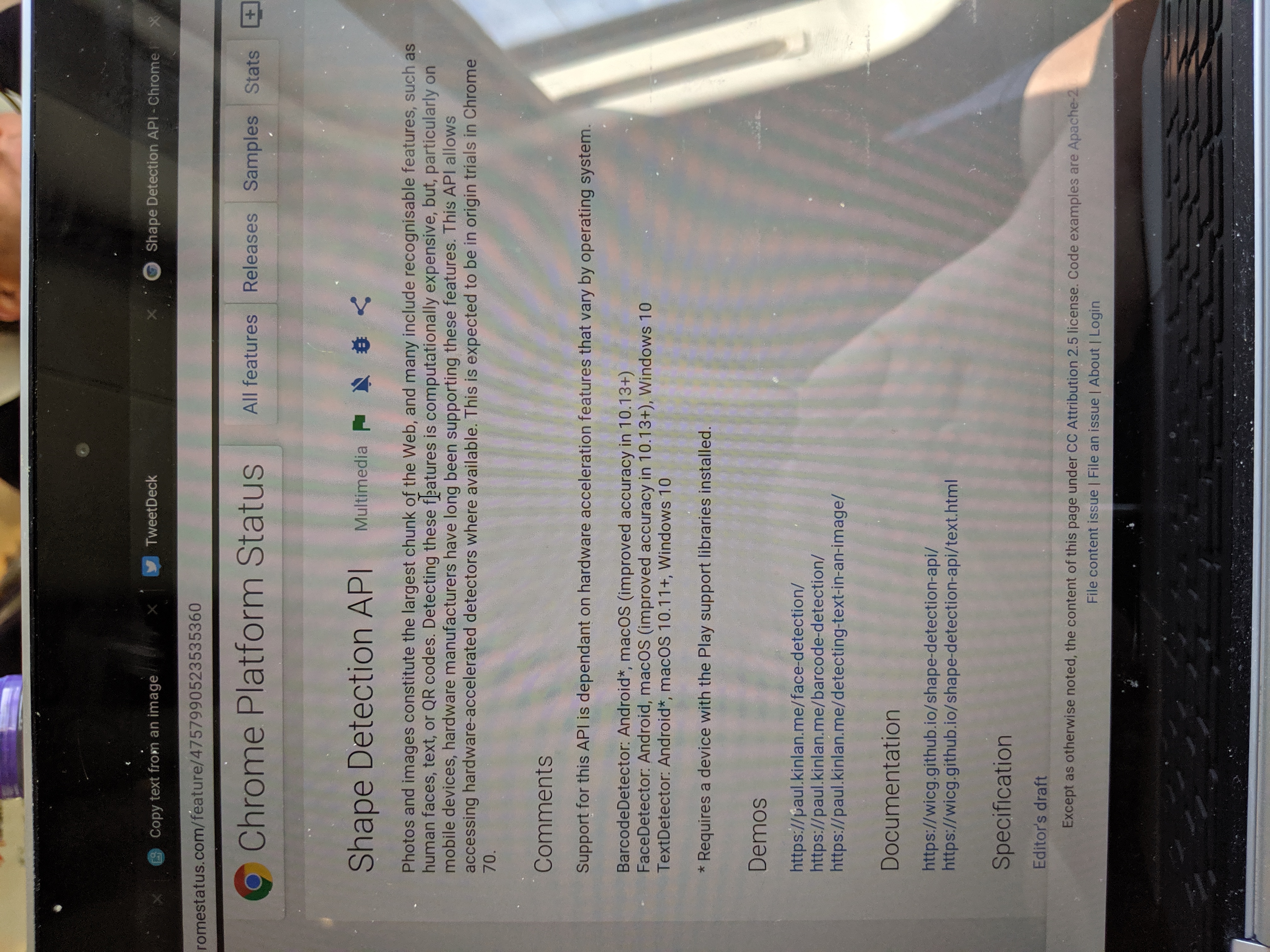

Extracting text from an image: Experiments with Shape Detection — 🔗

I had a little down time after Google IO and I wanted to scratch a long-term itch I've had. I just want to be able to copy text that is held inside images in the browser. That is all. I think it would be a neat feature for everyone.

It's not easy to add functionality directly into Chrome, but I know I can take advantage of the intent system on Android and I can now do that with the Web (or at least Chrome on Android).

Two new additions to the web platform - Share Target Level 2 (or as I like to call it File Share) and the TextDetector in the Shape Detection API - have allowed me to build a utility that I can Share images to and get the text held inside them.

The basic implementation is relatively straightforward: you create a Share Target and a handler in the Service Worker, and then once you have the image that the user has shared you run the TextDetector on it.

The Share Target API allows your web application to be part of the native sharing sub-system, and in this case you can now register to handle all image/* types by declaring it inside your Web App Manifest as follows.

"share_target": {

"action": "/index.html",

"method": "POST",

"enctype": "multipart/form-data",

"params": {

"files": [

{

"name": "file",

"accept": ["image/*"]

}

]

}

}

When your PWA is installed, you will see it in all the places where you can share images from.

The Share Target API treats sharing files like a form post. When a file is shared to the web app, the service worker is activated and its fetch handler is invoked with the file data. The data is now inside the service worker, but I need it in the current window so that I can process it. The service worker knows which window invoked the request, so you can easily target the client and send it the data.

self.addEventListener('fetch', event => {

if (event.request.method === 'POST') {

event.respondWith(Response.redirect('/index.html'));

event.waitUntil(async function () {

const data = await event.request.formData();

const client = await self.clients.get(event.resultingClientId || event.clientId);

const file = data.get('file');

client.postMessage({ file, action: 'load-image' });

}());

return;

}

...

...

});

Once the image is in the user interface, I then process it with the text detection API.

navigator.serviceWorker.onmessage = (event) => {

const file = event.data.file;

const imgEl = document.getElementById('img');

const outputEl = document.getElementById('output');

const objUrl = URL.createObjectURL(file);

imgEl.src = objUrl;

imgEl.onload = async () => {

const texts = await textDetector.detect(imgEl);

texts.forEach(text => {

const textEl = document.createElement('p');

textEl.textContent = text.rawValue;

outputEl.appendChild(textEl);

});

};

...

};

The biggest issue is that the browser doesn't naturally rotate the image, and the Shape Detection API needs the text to be in the correct reading orientation.

I used the rather easy to use EXIF-Js library to detect the rotation and then do some basic canvas manipulation to re-orientate the image.

EXIF.getData(imgEl, async function() {

// http://sylvana.net/jpegcrop/exif_orientation.html

const orientation = EXIF.getTag(this, 'Orientation');

const [width, height] = (orientation > 4)

? [ imgEl.naturalWidth, imgEl.naturalHeight ]

: [ imgEl.naturalHeight, imgEl.naturalWidth ];

canvas.width = width;

canvas.height = height;

const context = canvas.getContext('2d');

// We have to get the correct orientation for the image

// See also https://stackoverflow.com/questions/20600800/js-client-side-exif-orientation-rotate-and-mirror-jpeg-images

switch(orientation) {

case 2: context.transform(-1, 0, 0, 1, width, 0); break;

case 3: context.transform(-1, 0, 0, -1, width, height); break;

case 4: context.transform(1, 0, 0, -1, 0, height); break;

case 5: context.transform(0, 1, 1, 0, 0, 0); break;

case 6: context.transform(0, 1, -1, 0, height, 0); break;

case 7: context.transform(0, -1, -1, 0, height, width); break;

case 8: context.transform(0, -1, 1, 0, 0, width); break;

}

context.drawImage(imgEl, 0, 0);

});
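
The size calculation and the switch in the snippet above can also be pulled out into a pure function, which makes the mapping easier to check in isolation. This is a minimal sketch that follows the convention of the Stack Overflow answer linked above, where the canvas swaps width and height for orientations 5 to 8. It assumes the browser has not already applied the EXIF rotation itself, and the `orientationToCanvas` name is hypothetical.

```javascript
// EXIF orientation -> canvas size plus the six arguments for context.transform().
// `w` and `h` are the dimensions of the image as stored in the file.
const orientationToCanvas = (orientation, w, h) => {
  const matrices = {
    2: [-1, 0, 0, 1, w, 0],  // mirror horizontally
    3: [-1, 0, 0, -1, w, h], // rotate 180 degrees
    4: [1, 0, 0, -1, 0, h],  // mirror vertically
    5: [0, 1, 1, 0, 0, 0],   // transpose
    6: [0, 1, -1, 0, h, 0],  // rotate 90 degrees clockwise
    7: [0, -1, -1, 0, h, w], // transverse
    8: [0, -1, 1, 0, 0, w],  // rotate 90 degrees anticlockwise
  };

  // Orientations 5 to 8 turn the image on its side, so the canvas is h wide.
  const swap = orientation >= 5 && orientation <= 8;

  return {
    width: swap ? h : w,
    height: swap ? w : h,
    // Identity matrix when the orientation is 1, missing, or unknown.
    matrix: matrices[orientation] || [1, 0, 0, 1, 0, 0],
  };
};

// Usage with the elements from the snippet above:
// const { width, height, matrix } =
//   orientationToCanvas(orientation, imgEl.naturalWidth, imgEl.naturalHeight);
// canvas.width = width; canvas.height = height;
// context.transform(...matrix);
// context.drawImage(imgEl, 0, 0);
```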

And voilà, if you share an image to the app it will rotate the image and then analyse it, returning the text that it has found.

It was incredibly fun to create this little experiment, and it has been immediately useful for me. It does, however, highlight the inconsistency of the web platform. These APIs are not available in all browsers; they are not even available in all versions of Chrome. This means that as I write this article on Chrome OS, I can't use the app, but at the same time, when I can use it... OMG, so cool.

Small shrine in Engakuji Temple near Kamakura

This photo captures a small, serene shrine nestled within the grounds of Engakuji Temple, located near Kamakura, Japan. The image highlights the traditional Japanese architecture and the peaceful atmosphere of this sacred space. Read More

Wood Carving found in Engakuji Shrine near Kamakura

Discovered this incredible wood carving at Engakuji Shrine near Kamakura! More details to come soon. Read More

Sakura

This photo features a beautiful Yaezakura cherry blossom, a specific type of sakura known for its many layered petals. The image captures the delicate pink blossoms, celebrating the beauty of spring in Japan. Read More

Debugging Web Pages on the Nokia 8110 with KaiOS using Chrome OS

This blog post provides a guide on how to debug web pages on the Nokia 8110 (KaiOS) using Chrome OS with Crostini (m75 or later). It builds upon a previous post about using Web IDE for debugging KaiOS devices but focuses on using a Chrome OS environment. The guide outlines the necessary steps, including enabling Crostini USB support, installing required packages like USB tools, ADB, and Fastboot, and configuring udev rules to allow Chrome OS to recognize the Nokia 8110. The post includes commands for installing dependencies and verifying device connectivity. Read More

New WebKit Features in Safari 12.1 | WebKit — 🔗

Big updates for the latest Safari!

I thought that this was a pretty huge announcement, and the opposite of Google, which a while ago said that Google Pay Lib is the recommended way to implement payments... I mean, it's not a million miles away, Google Pay is built on top of Payment Request, but it's not PR first.

Payment Request is now the recommended way to implement Apple Pay on the web.

And my favourite feature given my history with Web Intents.

Web Share API

The Web Share API adds navigator.share(), a promise-based API developers can use to invoke a native sharing dialog provided by the host operating system. This allows users to share text, links, and other content to an arbitrary destination of their choice, such as apps or contacts.
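
A minimal sketch of calling it with feature detection. The `shareLink` name and the `nav` parameter (a stand-in for `navigator`, so the function can be exercised outside a browser) are assumptions for illustration.

```javascript
const shareLink = async (data, nav = globalThis.navigator) => {
  // navigator.share() only exists where the browser supports it, and it
  // must be called in response to a user gesture such as a click.
  if (!nav || typeof nav.share !== 'function') return false;

  try {
    await nav.share(data); // e.g. { title, text, url }
    return true;
  } catch (err) {
    // The promise rejects with an AbortError when the user dismisses the dialog.
    if (err.name === 'AbortError') return false;
    throw err;
  }
};

// Usage inside a click handler:
// shareLink({ title: document.title, url: location.href });
```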

Now just to get Share Target API and we are on to a winner! :)

Offline fallback page with service worker — 🔗

Years ago, I did some research into how native applications responded to a lack of network connectivity. Whilst I've lost the link to the analysis (I could swear it was on Google+), the overarching narrative was that many native applications are so inextricably tied to the internet that they just straight up refuse to function. That sounds like a lot of web apps. The thing that set them apart from the web, though, was that the experience was still 'on-brand': Bart Simpson would tell you that you need to be online (for example), and yet for the vast majority of web experiences you get a 'Dino' (see chrome://dino).

We've been working on Service Worker for a long time now, and whilst we are seeing more and more sites have pages controlled by a Service Worker, the vast majority of sites don't even have a basic fallback experience when the network is not available.

I asked my good chum Jake if we have any guidance on how to build a generic fallback page, on the assumption that you don't want to create an entirely offline-first experience, and within 10 minutes he had created it. Check it out.

For brevity, I have pasted the code in below because it is only about 20 lines long. It caches the offline assets, and then for every fetch that is a 'navigation' fetch it will see if it errors (because of the network) and then render the offline page in place of the original content.

addEventListener('install', (event) => {

event.waitUntil(async function() {

const cache = await caches.open('static-v1');

await cache.addAll(['offline.html', 'styles.css']);

}());

});

// See https://developers.google.com/web/updates/2017/02/navigation-preload#activating_navigation_preload

addEventListener('activate', event => {

event.waitUntil(async function() {

// Feature-detect

if (self.registration.navigationPreload) {

// Enable navigation preloads!

await self.registration.navigationPreload.enable();

}

}());

});

addEventListener('fetch', (event) => {

const { request } = event;

// Always bypass for range requests, due to browser bugs

if (request.headers.has('range')) return;

event.respondWith(async function() {

// Try to get from the cache:

const cachedResponse = await caches.match(request);

if (cachedResponse) return cachedResponse;

try {

// See https://developers.google.com/web/updates/2017/02/navigation-preload#using_the_preloaded_response

const response = await event.preloadResponse;

if (response) return response;

// Otherwise, get from the network

return await fetch(request);

} catch (err) {

// If this was a navigation, show the offline page:

if (request.mode === 'navigate') {

return caches.match('offline.html');

}

// Otherwise throw

throw err;

}

}());

});

That is all. When the user is online they will see the default experience.

And when the user is offline, they will get the fallback page.
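
The worker only takes effect once a page registers it. A minimal sketch of that step, assuming the script above is served as `/sw.js` (a hypothetical path); the `registerOfflineWorker` name and the `nav` parameter, a stand-in for `navigator`, are also assumptions for illustration.

```javascript
// '/sw.js' is a hypothetical path; use wherever the worker script is served from.
const registerOfflineWorker = async (nav = globalThis.navigator, url = '/sw.js') => {
  // Browsers without service worker support simply keep the default behaviour.
  if (!nav || !('serviceWorker' in nav)) return null;

  return nav.serviceWorker.register(url);
};

// Usage from a page script:
// registerOfflineWorker();
```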

I find this simple script incredibly powerful, and yes, whilst it can still be improved, I do believe that even just a simple change in the way that we speak to our users when there is an issue with the network has the ability to fundamentally improve the perception of the web for users all across the globe.

Update: Jeffrey Posnick kindly reminded me about using Navigation Preload so that requests don't have to wait on the service worker booting. This is especially important if you are only handling failed network requests.

testing block image upload

I successfully tested the image upload feature, evidenced by the accompanying image. If you see this post and the image, my test was successful! Read More