What is this?

This is my Software Engineering final year project for University from about 2003. I used to be work in the Fraud Detection industry (mortgage and credit card fraud) and this project was to solve a problem that I had found in the telecoms industry: fraudulent phone calls. It was impossible (for me) to get phone records from telecoms companies, so I had to build a tool that would model fraudulent calls and normal call patterns, I then had to build a tool that would detect calls that were fradulent from all of the call records.

The detector, iirc was a Multilayered perceptron Neural network, and it worked a little too well (which suggests my modelling was not adequaute.).

Anyway I learnt a lot.

Why are you posting this?

For fun mostly. I recently got linked to a project on Github that this project inspired, which then lead me down a hole that I had to follow, which lead me to finding my project and then I needed to post it.

https://github.com/mayconbordin/cdr-gen is the project that was based on my call detail record generator that was written in MS Access. :D

Enjoy the project!!

Note: Many of the TOC links don't seem to work :)

An Investigation into Real-time Fraud Detection in the Telecommunications Industry

Project Tutor

Dr Abir Hussain

Contents Page

7.2 Investigation into the Telecommunications Industry 14

7.2.1 Mobile Phone Telephony: 14

7.2.2 Fixed Line Telephony: 15

7.3. Investigation into Fraud 21

7.3.1 Who suffers from fraud? 21

7.4 Investigation of Fraud in the Telecommunication Industry 23

7.4.1 What is Telecommunication Fraud? 23

7.4.2 What does this mean to the Telecomm companies? 24

7.4.3 How is Fraud Perpetrated? 25

7.4.4 How do Telecomm Companies Respond to Fraud? 29

7.4.5 Some Key Attributes which may Identify Fraud. 30

7.5 Methods to Detect Fraud 31

7.5.1 Why Call Pattern Analysis is not always enough 41

7.6 Consideration of Real Time Methods 42

8 IDENTIFICATION OF PROBLEM AND SPECIFICATION 43

8.2 System Tools Research and Requirements 45

8.2.1 Further Requirements for the CDR Tool and Development Tool Research 46

8.2.2 Further Requirements for the Fraud Detection Prototype and Development Tool Research 49

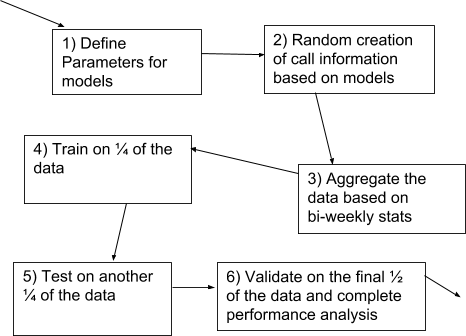

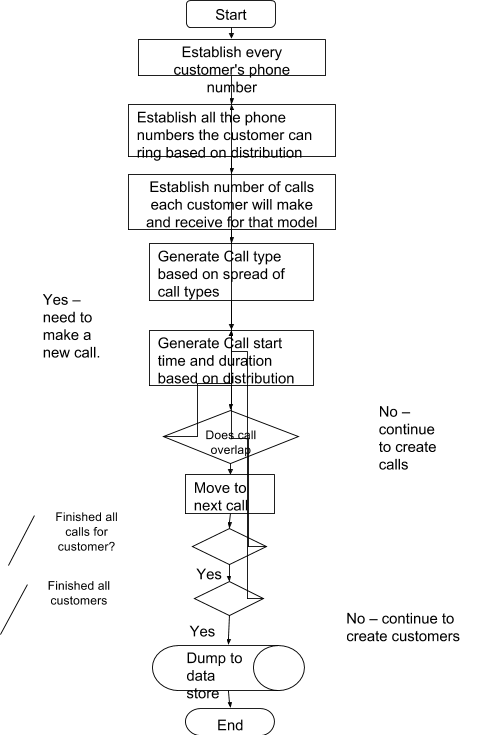

9.3.1 Flow of Data When Creating a Model 62

9.3.2 Consideration of the UI 64

9.3.4 Data Representation and Considerations 64

9.3.4.1 Internal Data Representation 64

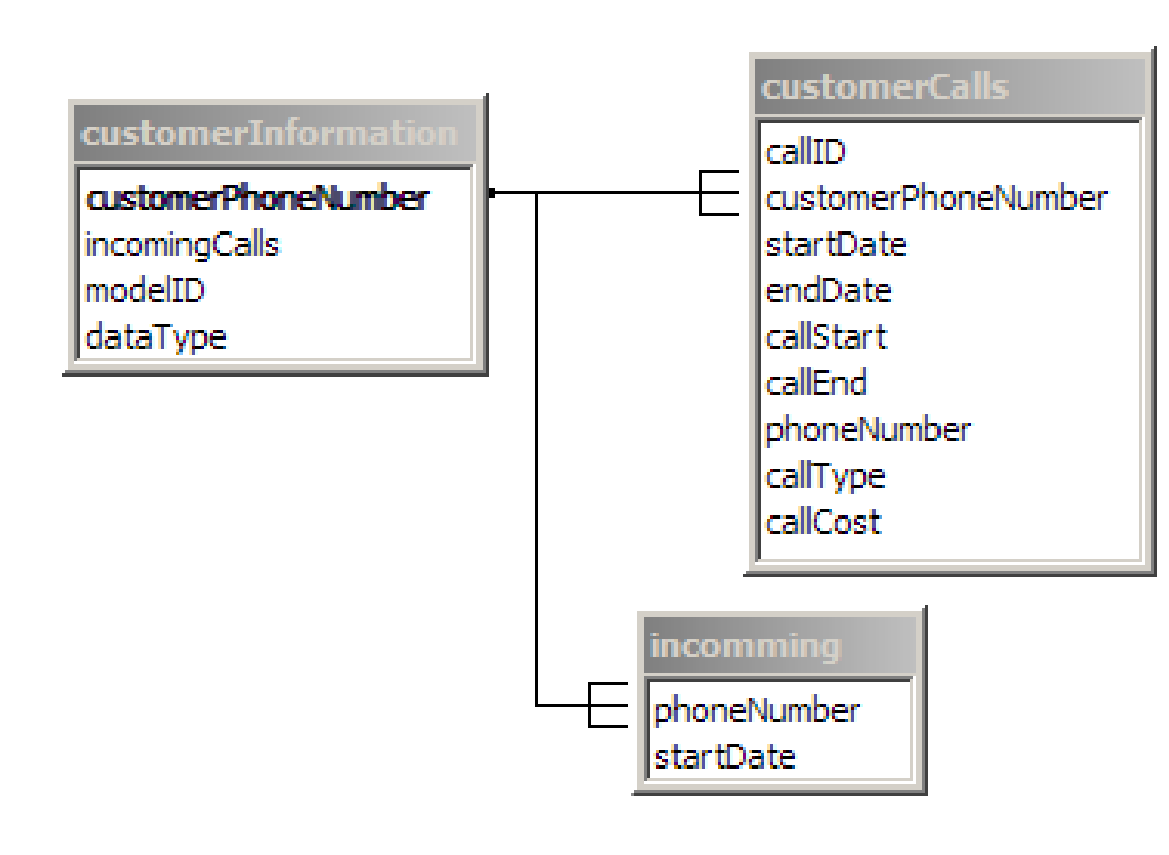

9.3.4.2 Customer Information 64

9.3.4.3 Entity Relationship 65

9.3.4.5 Index Considerations 67

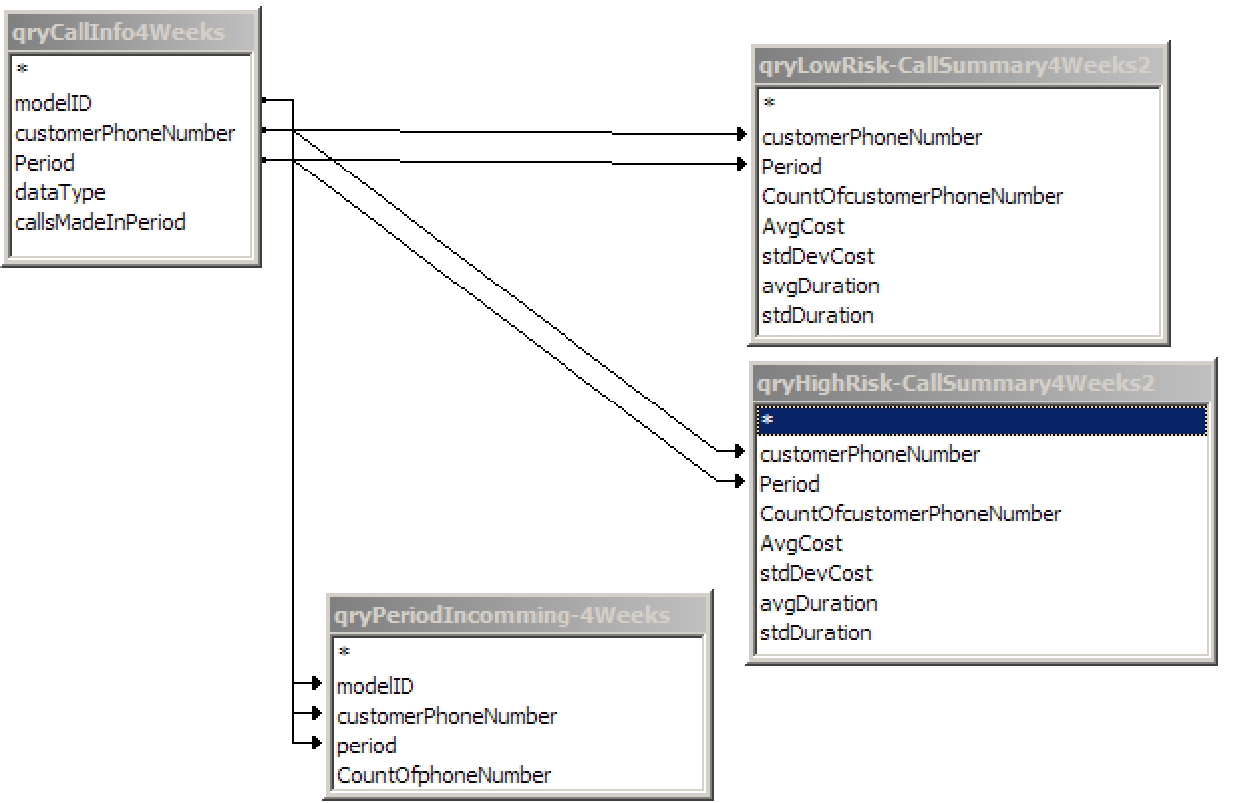

9.3.4.6 Aggregating the Data 67

9.3.4.8 Testing the Model Generator. 69

9.5.1 What is a neural network? 72

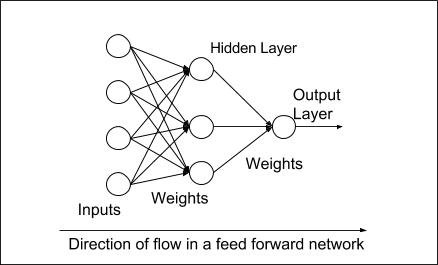

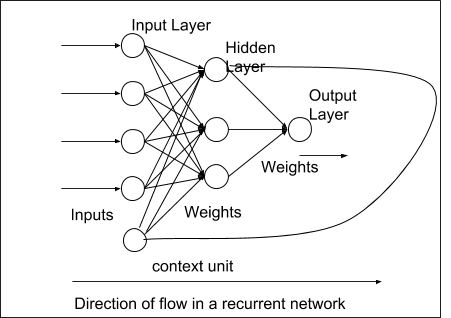

9.5.2 Types of Neural Networks 76

9.5.3 What Neural network to use? 78

9.5.4 Training a Neural Network. 79

9.5.5 Training Method for the Feed forward Network 83

9.5.6 Problems Which can be Encounter when Training 84

9.5.7 Inputs defined in the NN. 85

9.5.9 Consideration of the Data Being Presented to the Network 89

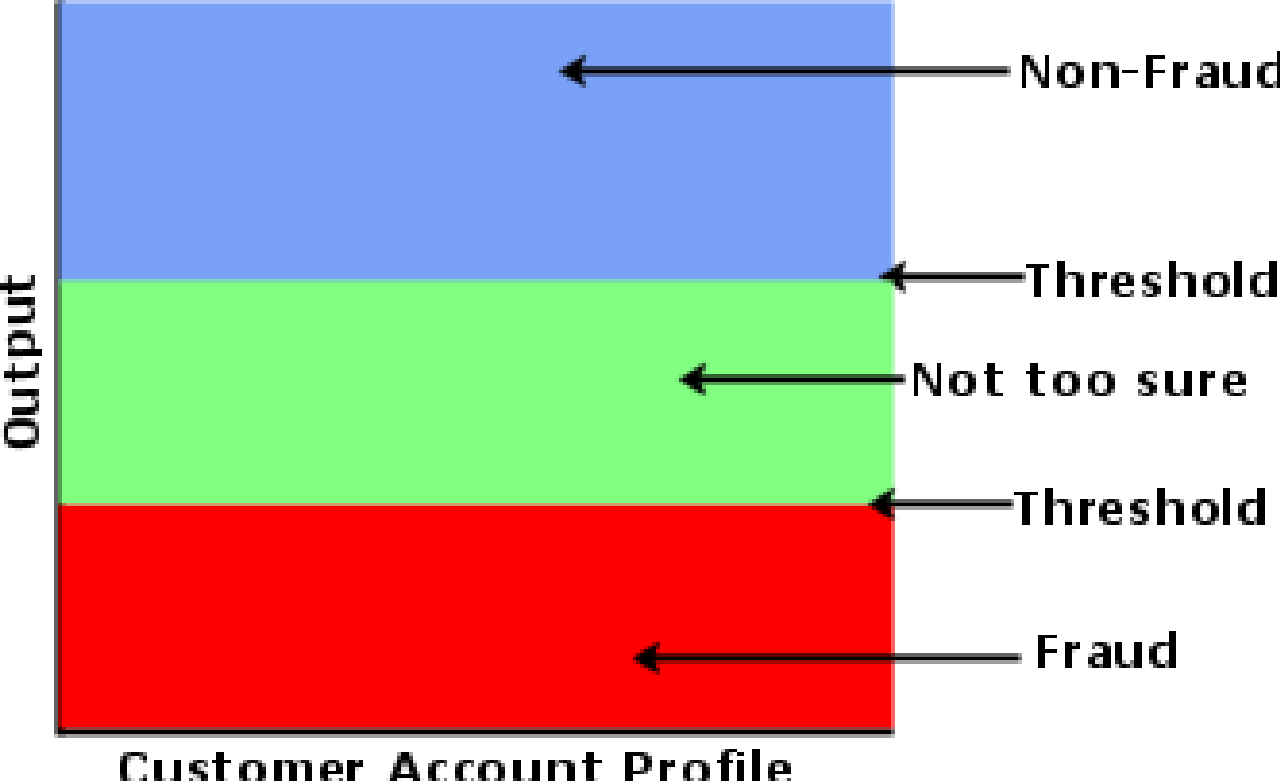

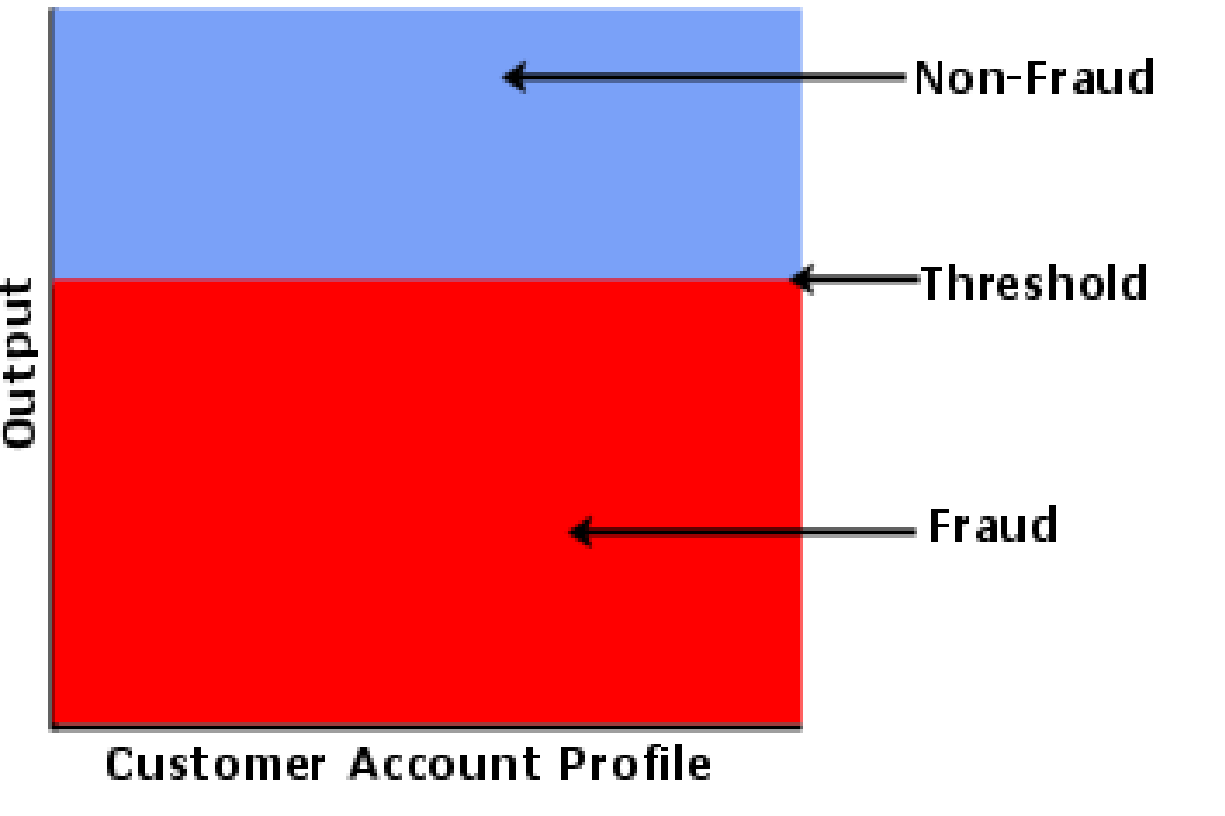

9.5.10 Consideration of the Output of the Network. 90

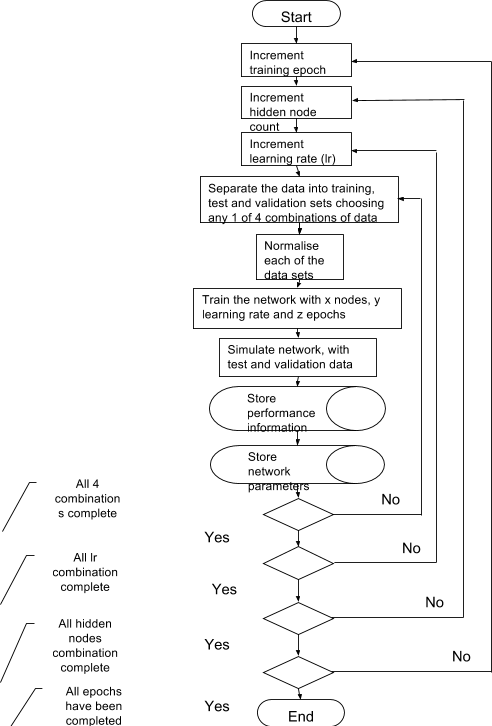

9.6 Neural Network Creation Tools Design 92

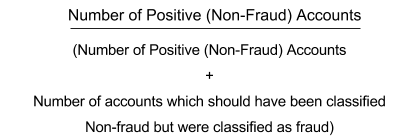

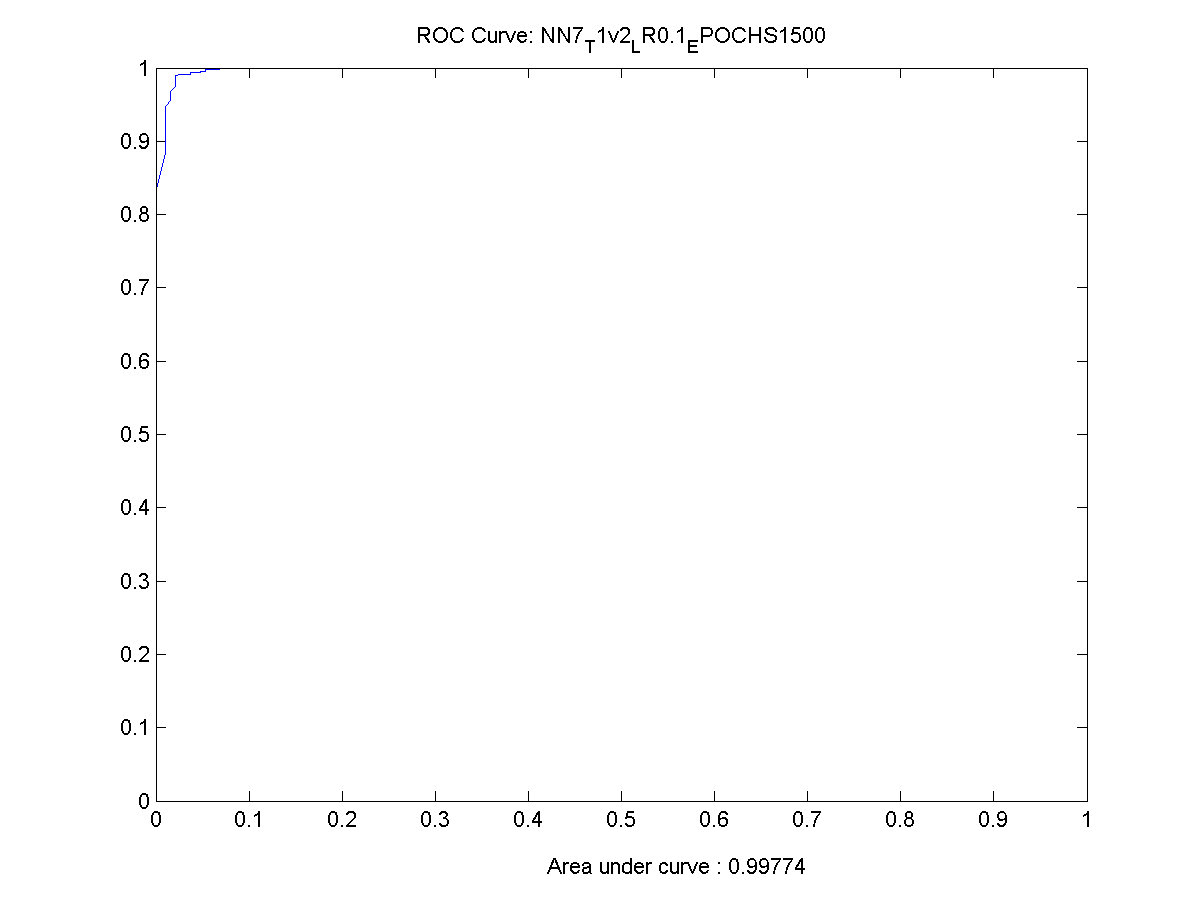

9.6.3 Performance Analysis and Testing 95

9.6.4 Establishing the Most Appropriate Threshold for the Final Network. 101

9.6.4 Testing the Network Creation Tool. 102

9.7.1 Methods to generate the best models. 103

9.7.2 Brief discussion about the models used. 104

10.1 Overview of how to study the graphs 107

10.8.1 The weights from the input layer to the hidden node 122

10.8.2 The weights to the Output Layer 122

10.8.4 Proposed Training Regime 122

11.3 Which Training Method was Most Appropriate 125

11.4 Other Points About the Neural Network 125

13.1 How I handled the project 127

16.2.1.1 How to read the performance information off the CD 141

16.2.1.2 Function Descriptions 142

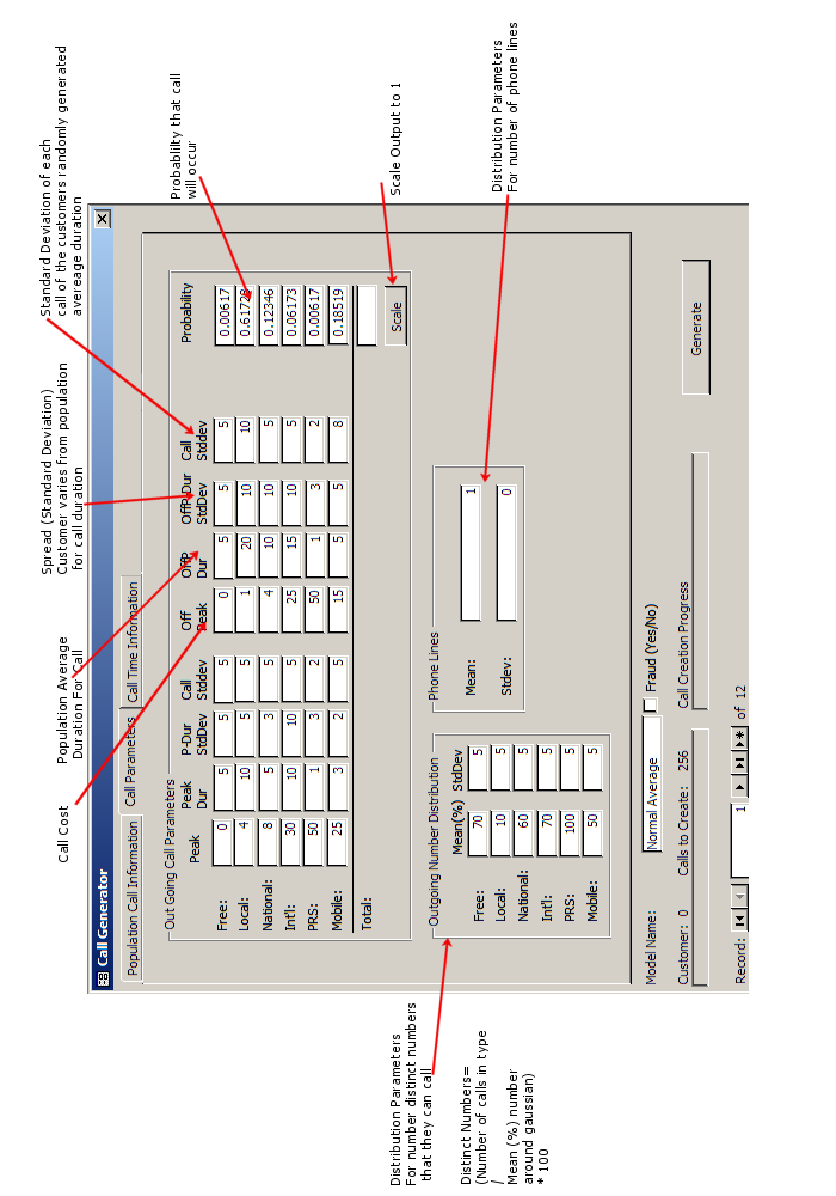

16.3 CDR Generation Tool Screen Shots 146

16.4.2 Neural Network Tools 152

16.6.2 Time Plan (Interim) 160

16.7 Interim Report & Specification 162

1. Abstract

An investigation into fraud detection in the telecom industry with a focus on development of a tool to help aid the detection process.

Neural networks were employed to find anomalous call patterns for customers over two week periods which matched call patterns of previously known fraud.

Customer information was generated using a bespoke tool and a final neural network was produced after rigorous testing which can successfully classify fraudulent and non fraudulent activity of customers.

Keywords: Fraud Detection, Software Engineering, Customer Detail Record, Database, Neural Network

2. Acknowledgements

I have enjoyed working on this project and I would like to thank my parents and family for the help and support that they have given me throughout this year.

I would also like to take this opportunity to thank Dr Abir Hussain for the help and support that she has given me as a project tutor this year.

I hope this report shows the amount of work and effort that went into this project during my final year studies.

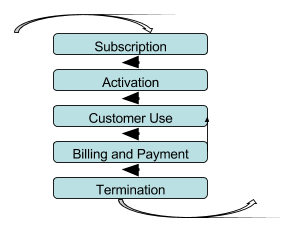

3. List of FiguresFigure 1 Process of a customer of a telecomm company 18

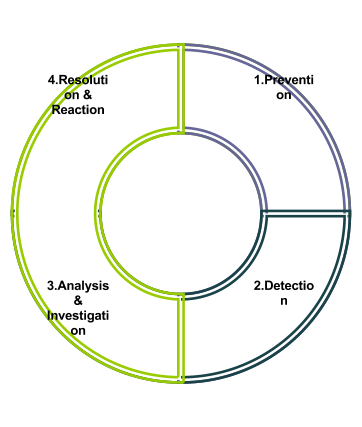

Figure 2 The Fraud Management Cycle 29

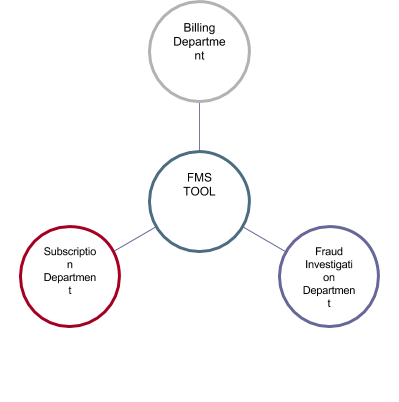

Figure 3 Roles where an FMS Tool maybe used 32

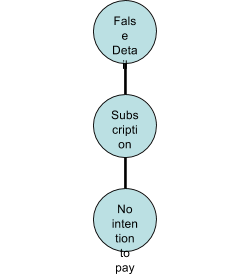

Figure 4 Subscription Fraud 33

Figure 6 A) Non-linear problem separation B) Added Dimensions 39

Figure 7 Normal Linear Sequential Model (Waterfall) 43

Figure 8 Amended Linear Sequential Model (Waterfall) 43

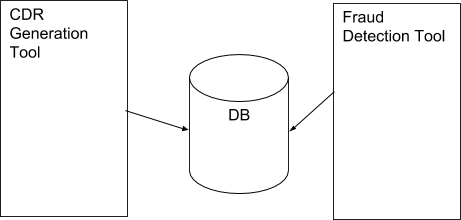

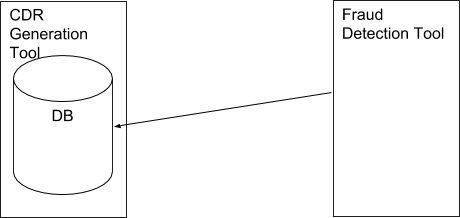

Figure 9 Standard model for database communication 47

Figure 10 An Ideal situation for CDR Tool and Fraud Detection Tool 49

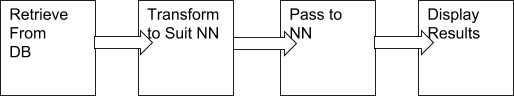

Figure 11 Processing the data through a neural network 49

Figure 12 Abstract overview of data flow in the system 53

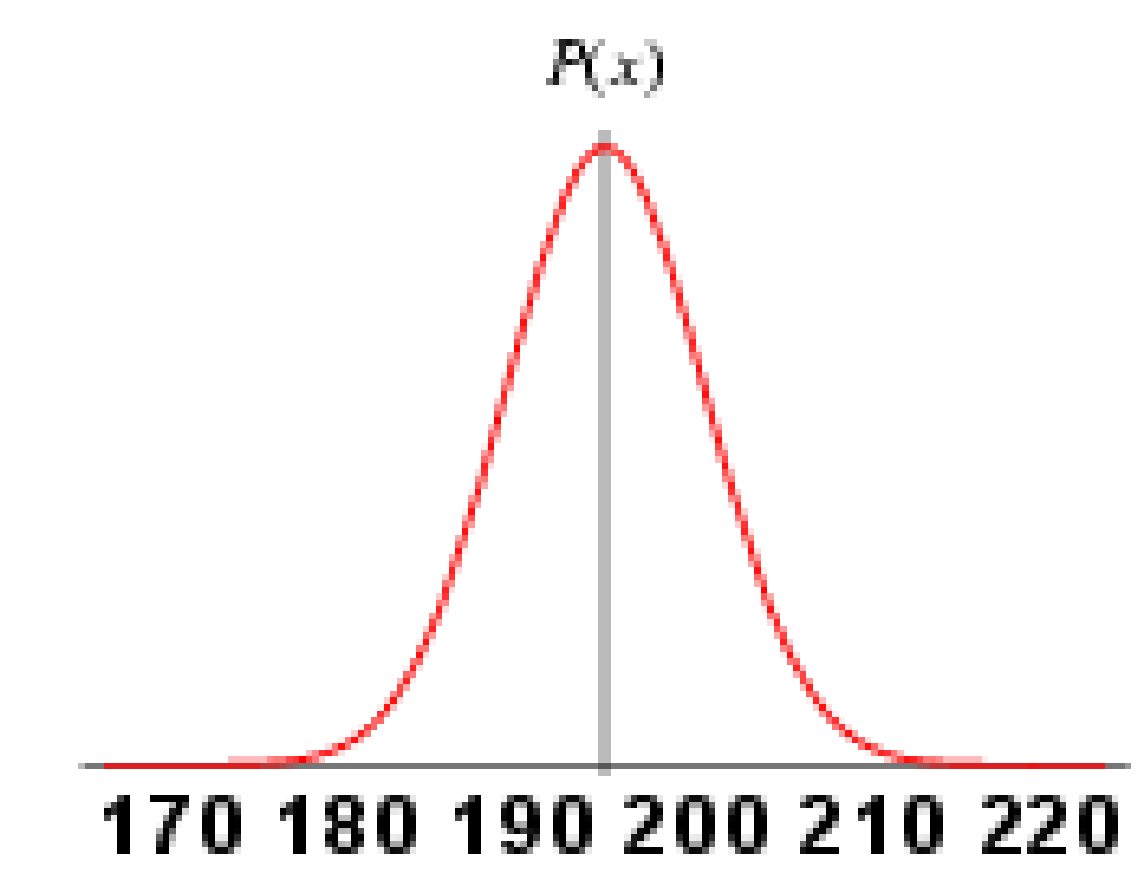

Figure 13 A Gaussian distribution based on male heights in the UK 55

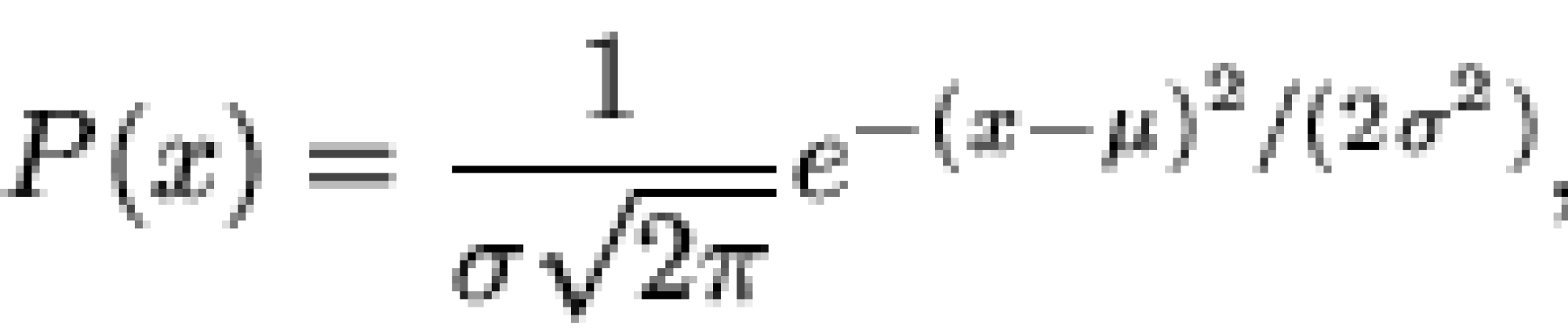

Figure 14 The Gaussian distribution function 56

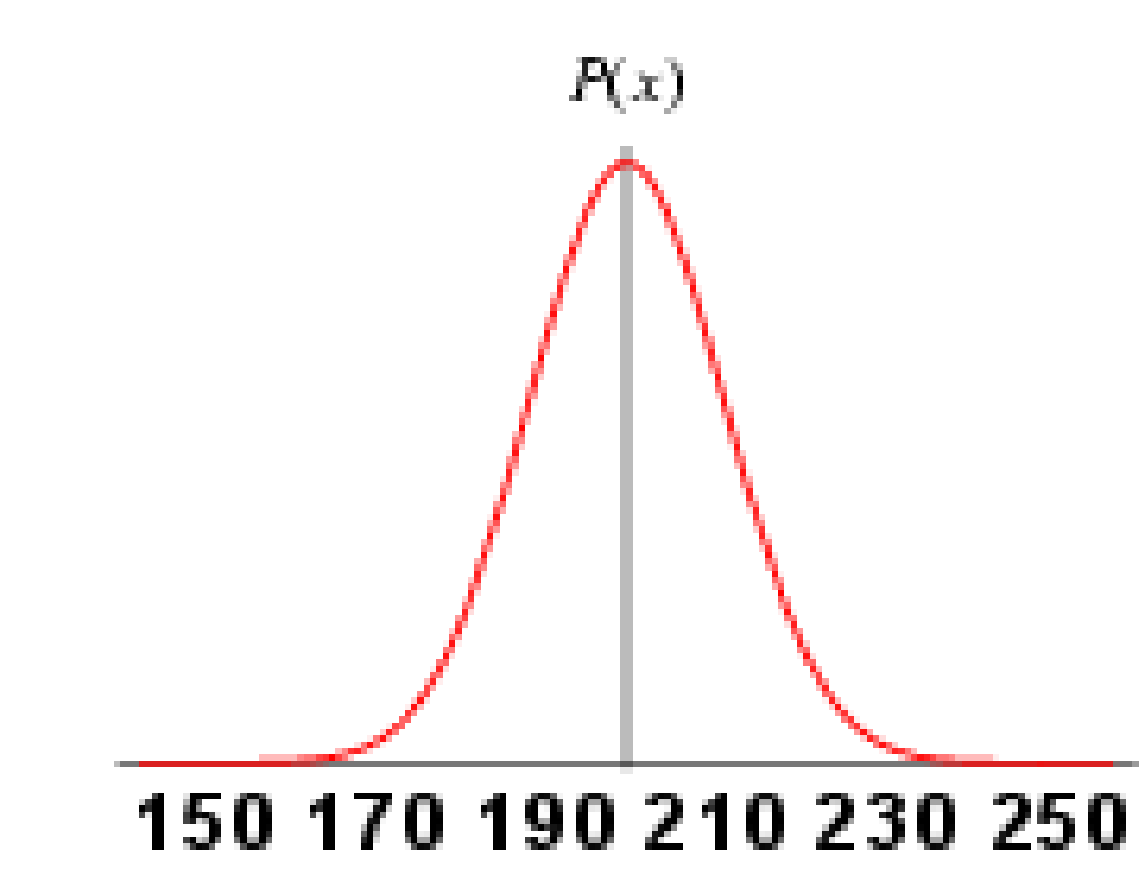

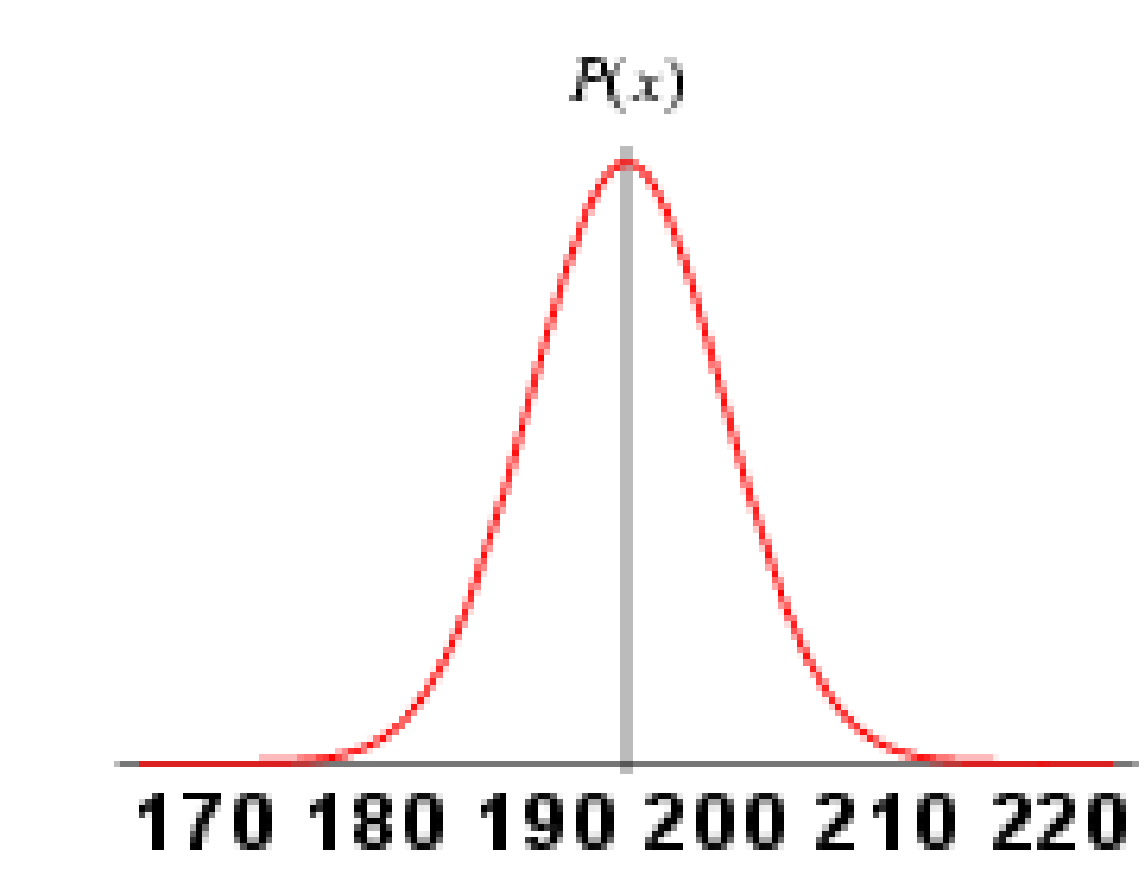

Figure 15 Gaussian Distribution A 57

Figure 16 Gaussian Distribution B 57

Figure 18 Customer Generate tool flow diagram 63

Figure 19 Basic Entity Relationship for customer information 65

Figure 20 Overview of tables, fields and relevant joins used in the final output query 67

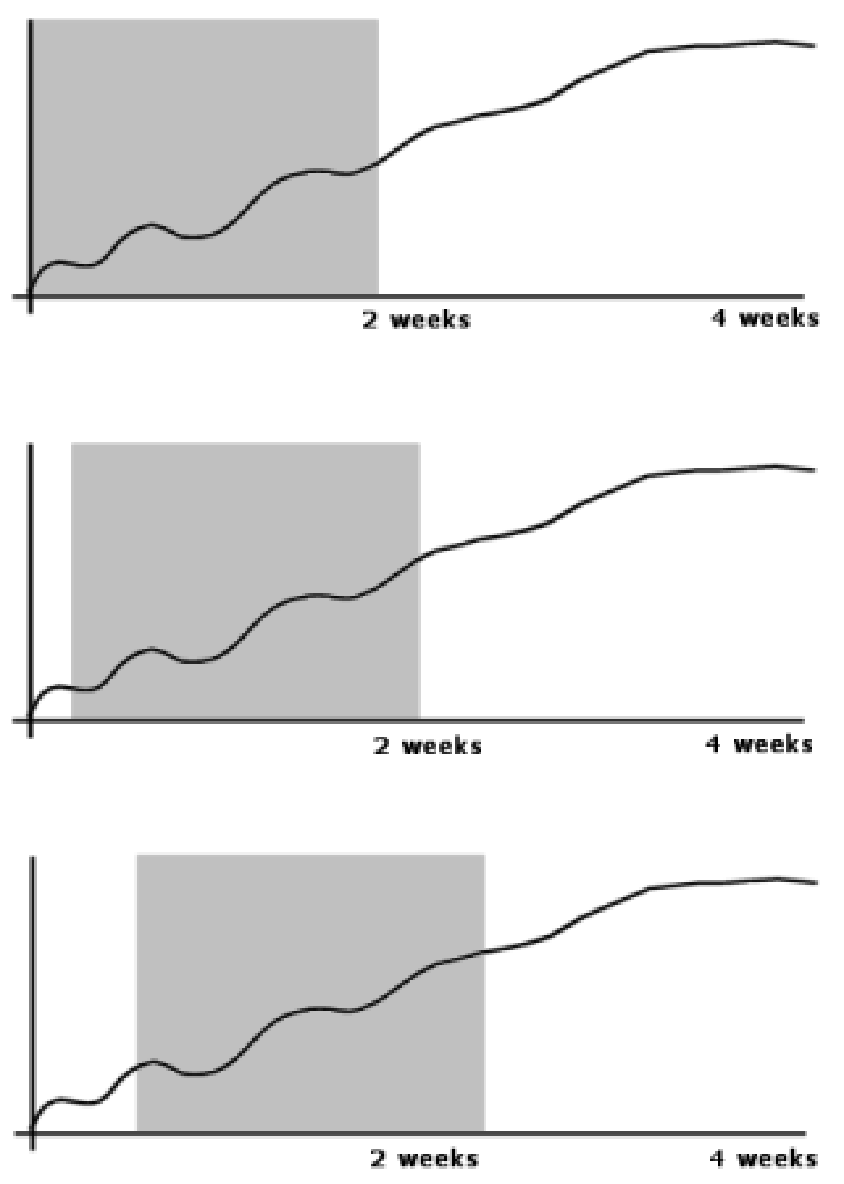

Figure 21 Sliding Window Effect 68

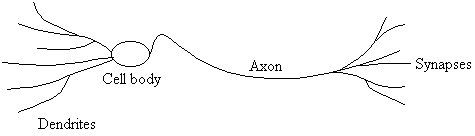

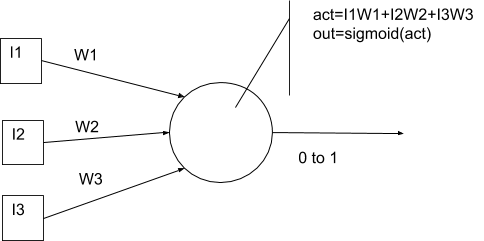

Figure 23 An artificial neuron based on Binary Threshold Logic Unit 73

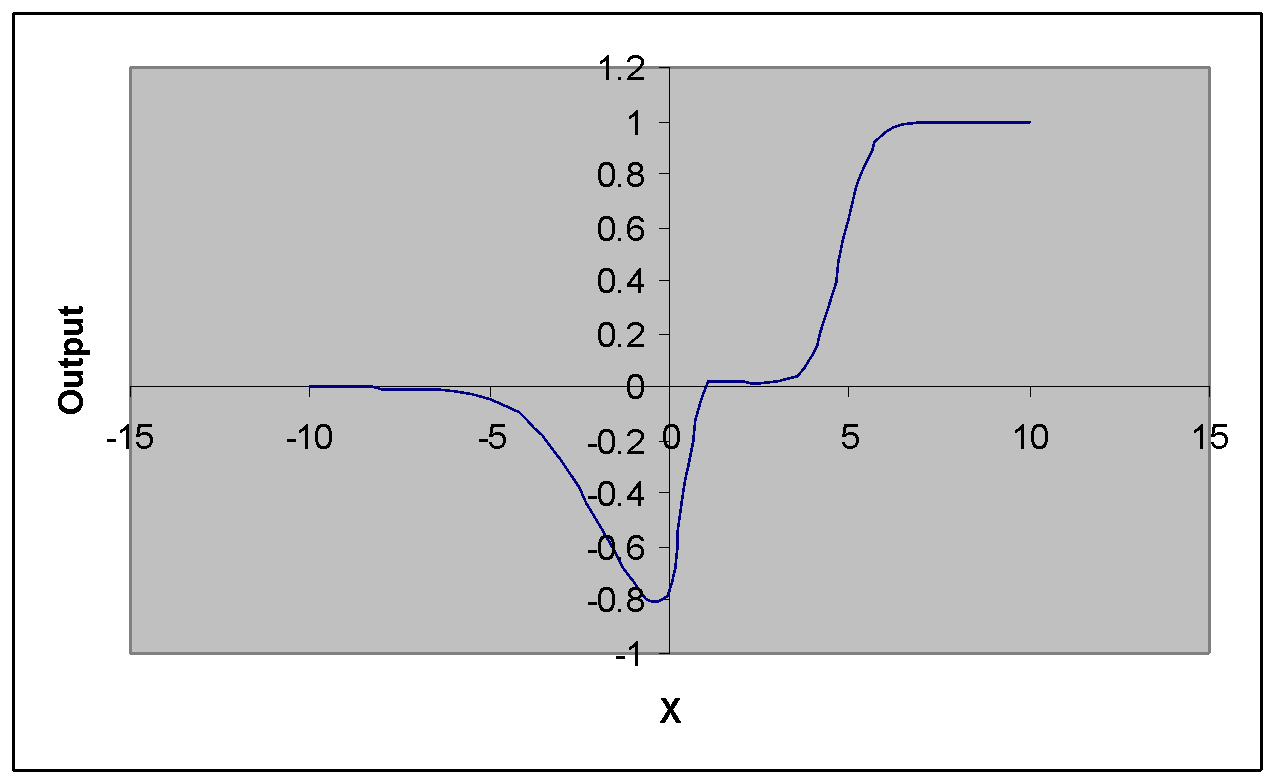

Figure 24 Logistic Sigmoid function & Tan Sigmoid function 74

Figure 25 An artificial neuron based on a continuous sigmoid output function 74

Figure 26 Combining logsig(5x-2) + logsig(x+2) – logsig(2½x -12 ) 75

Figure 27 The Feed forward Neural Network 76

Figure 28 A Recurrent Network 77

Figure 29 Single Threshold system 90

Figure 30 Dual Threshold System 91

Figure 31 Training Tool Data Flow 94

Figure 32 Data extraction tool data flow 95

Figure 33 Y-Axis for ROC Chart (Sensitivity) 97

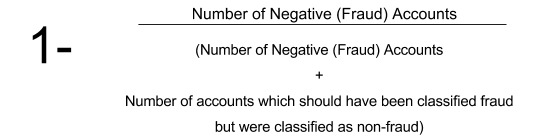

Figure 34 X-Axis for ROC Chart (1 - Specifity) 97

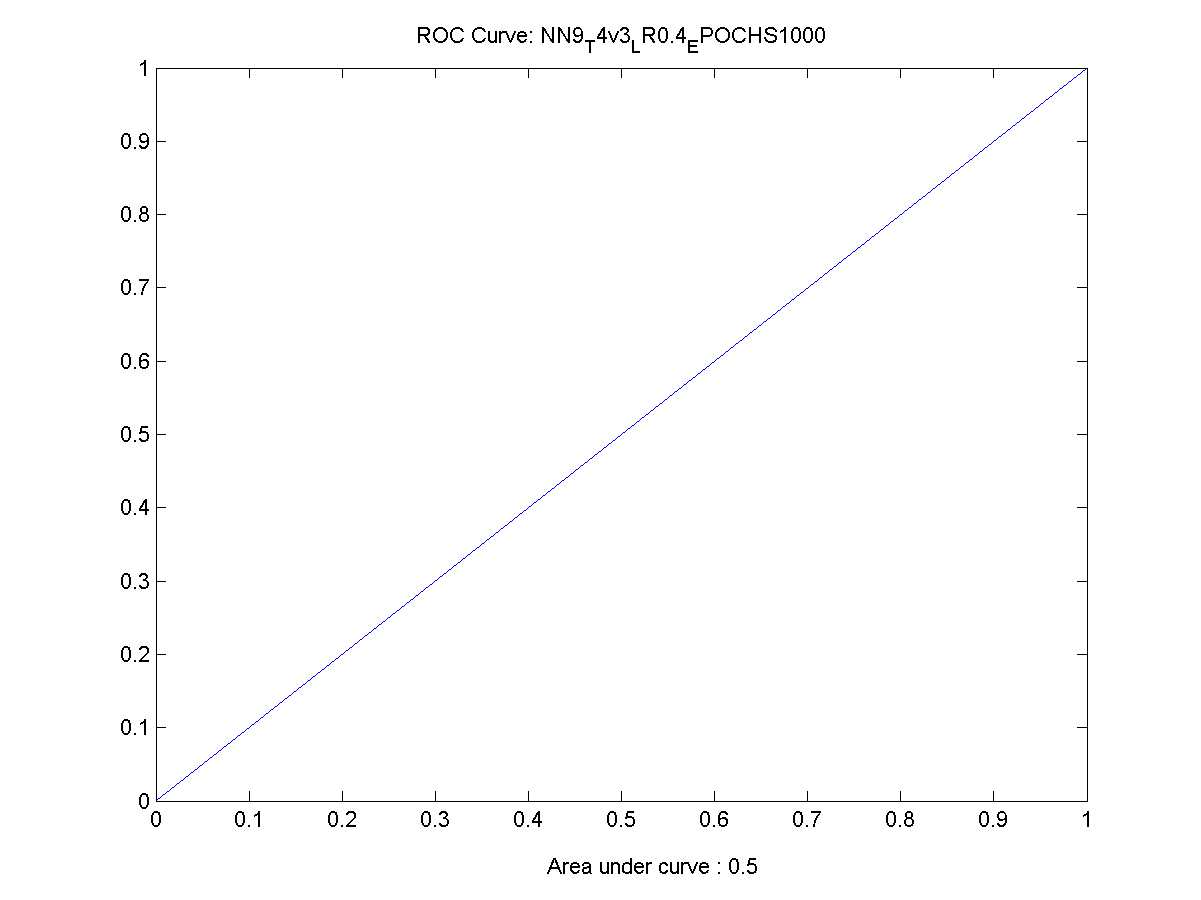

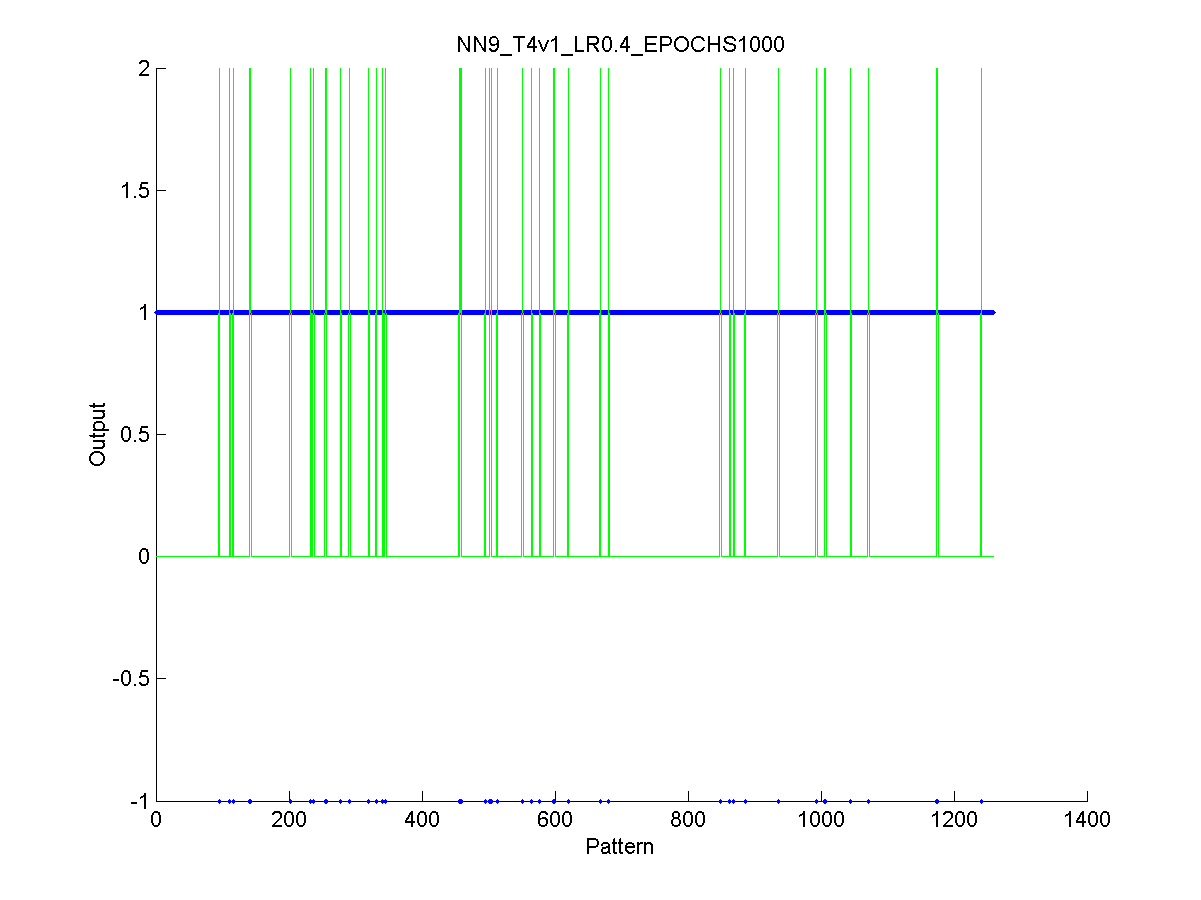

Figure 35 An incorrectly trained neural network ROC depiction 98

Figure 36 Actual output of an incorrectly trained network 99

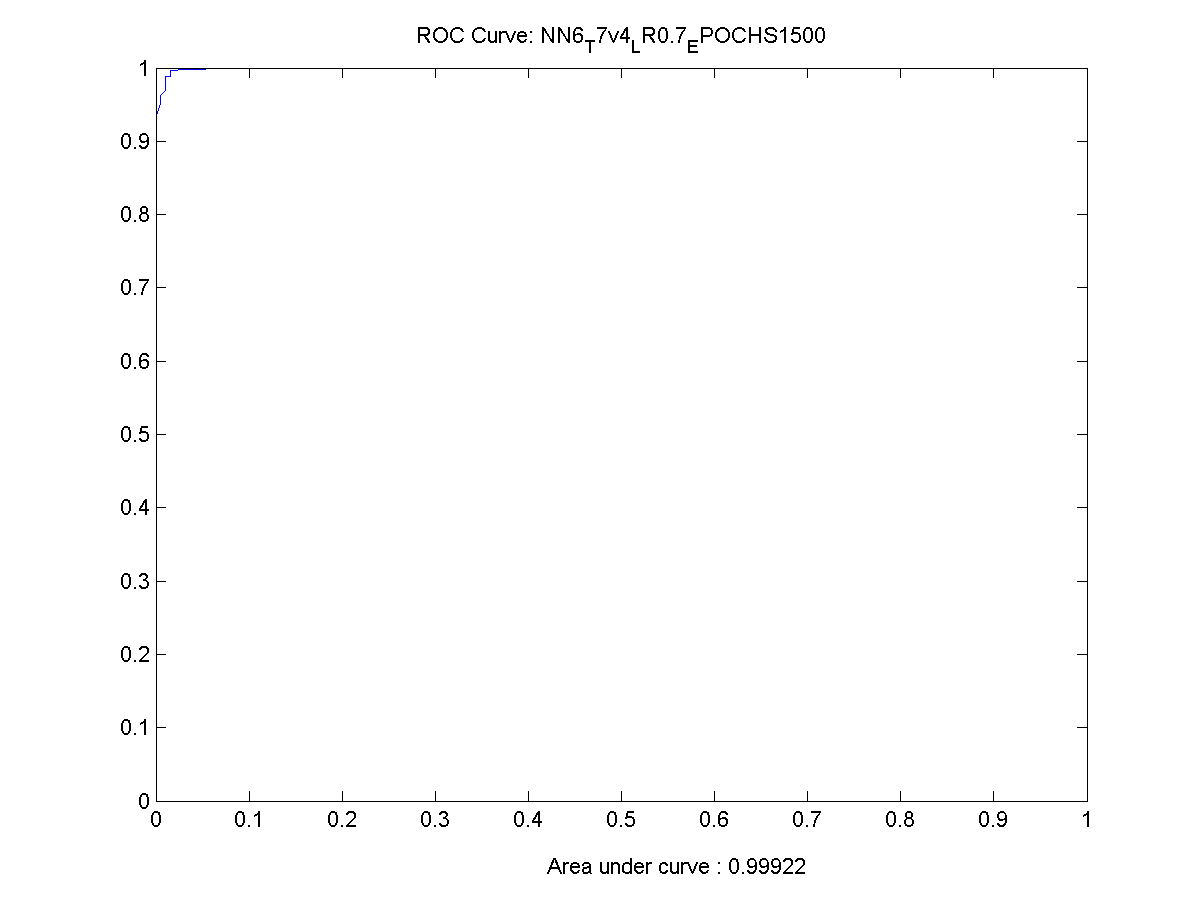

Figure 37 ROC Chart for a working neural network 99

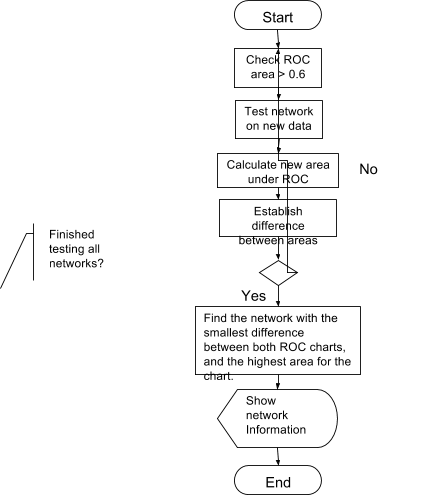

Figure 38 Data flow for establish the performance of the neural networks 101

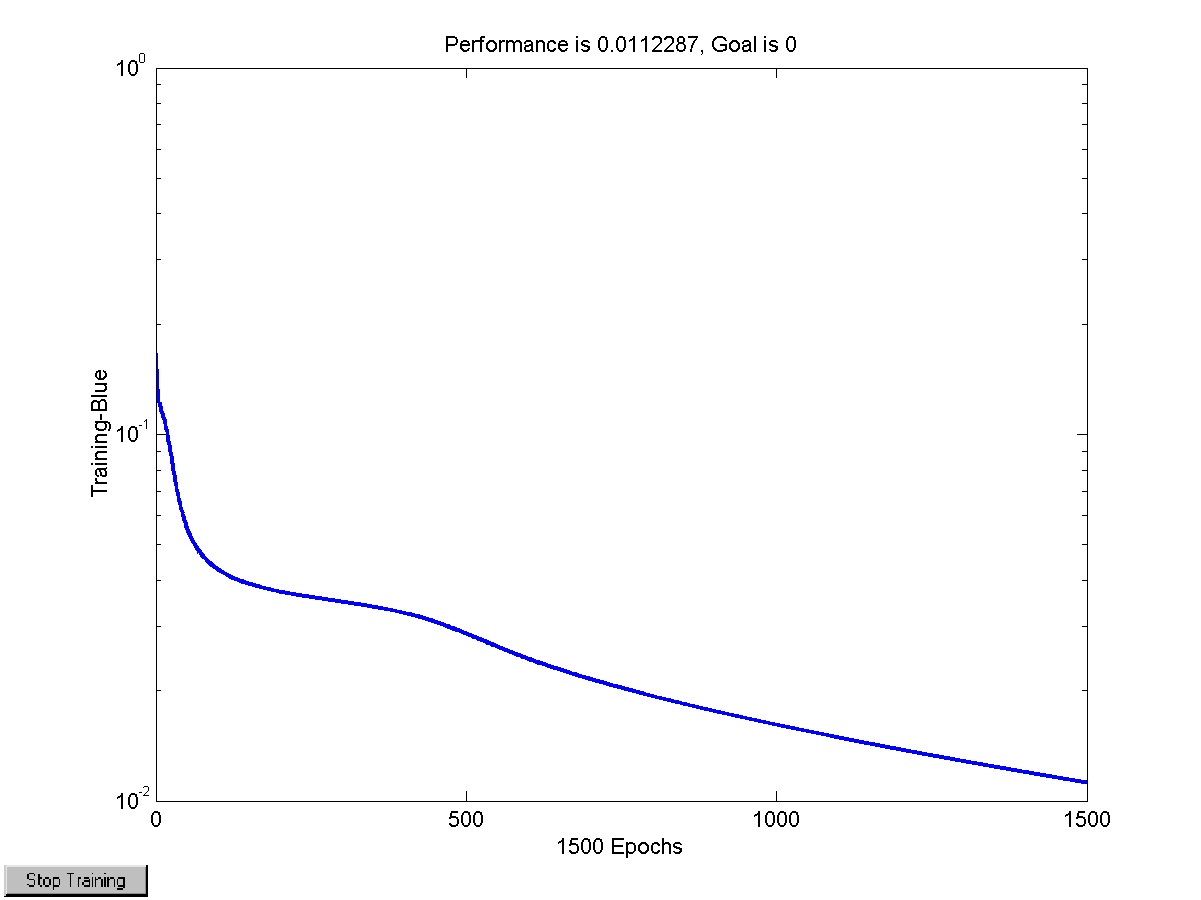

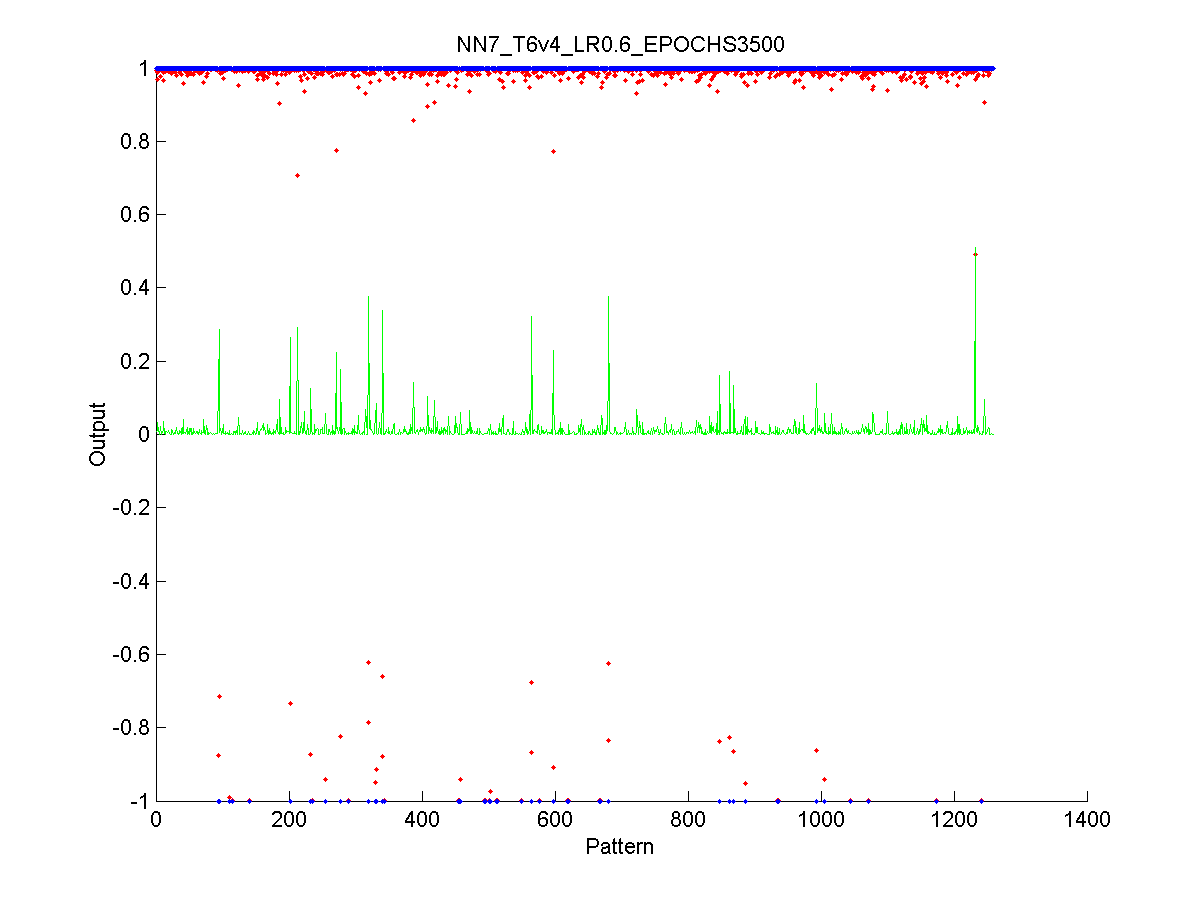

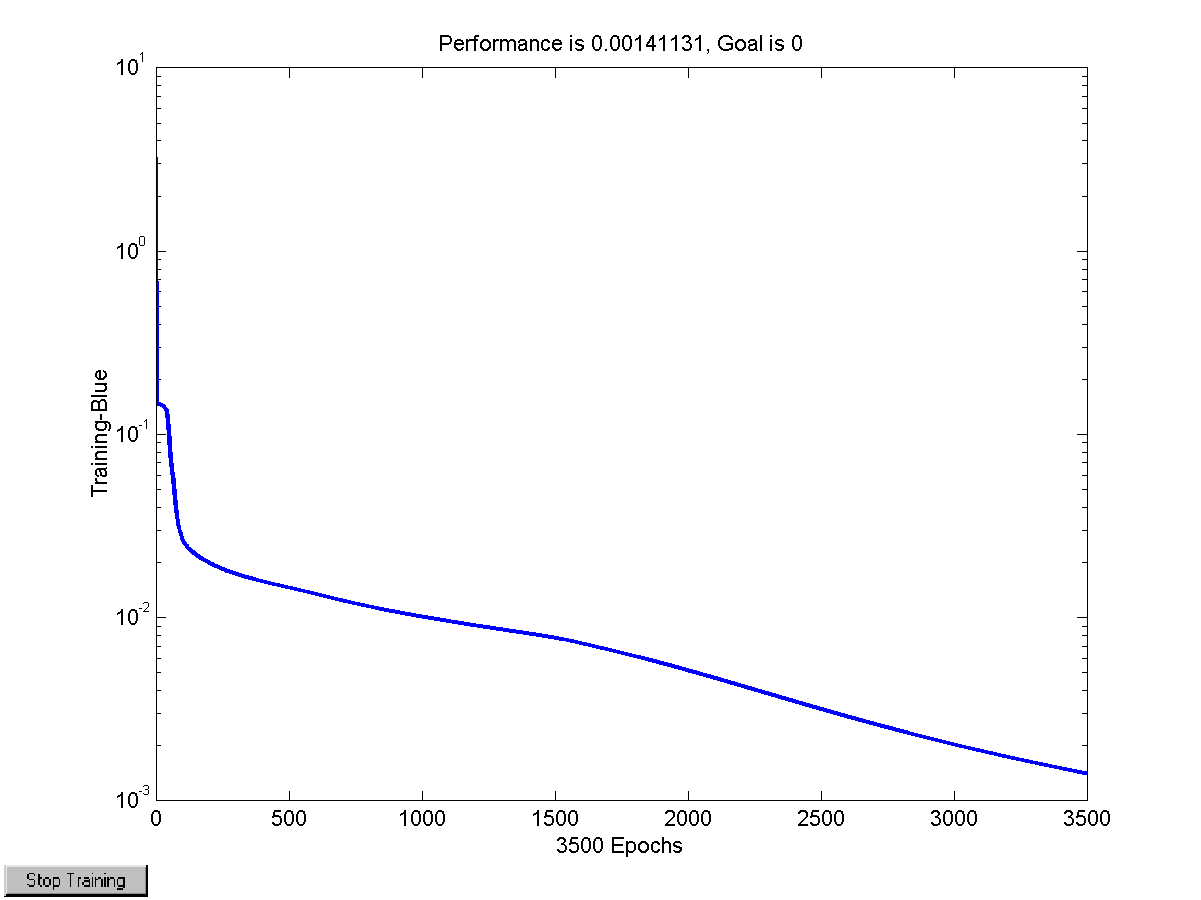

Figure 39 Performance of the training algorithm 107

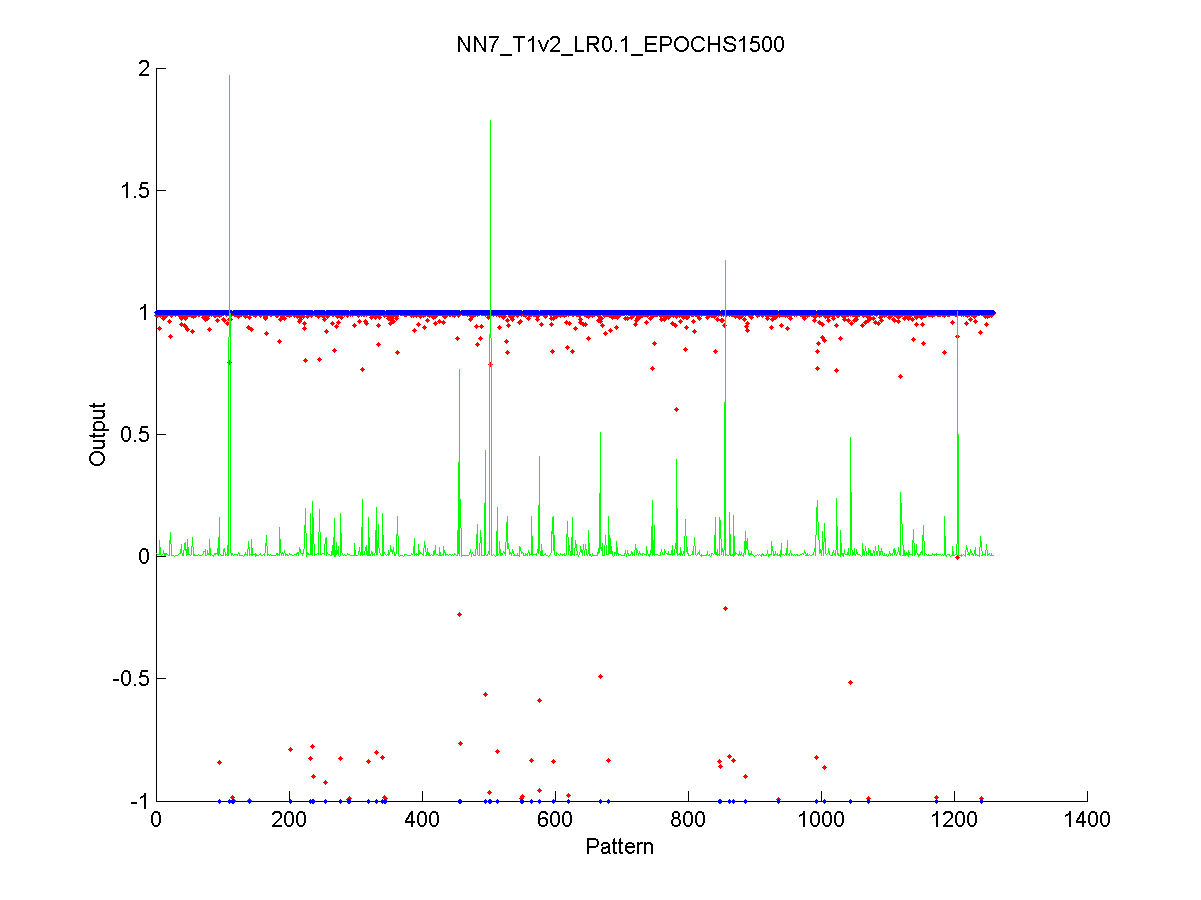

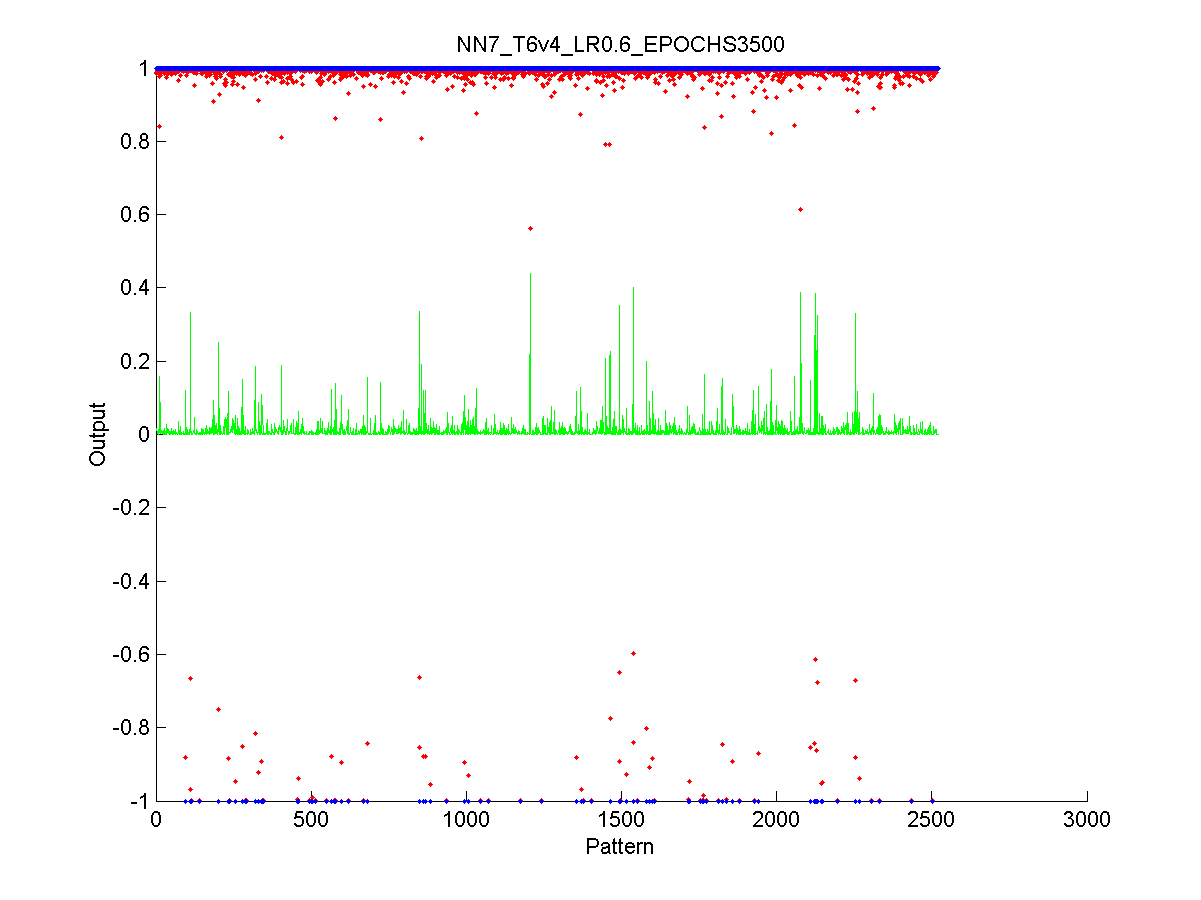

Figure 40 Output of the neural network after the test stage 108

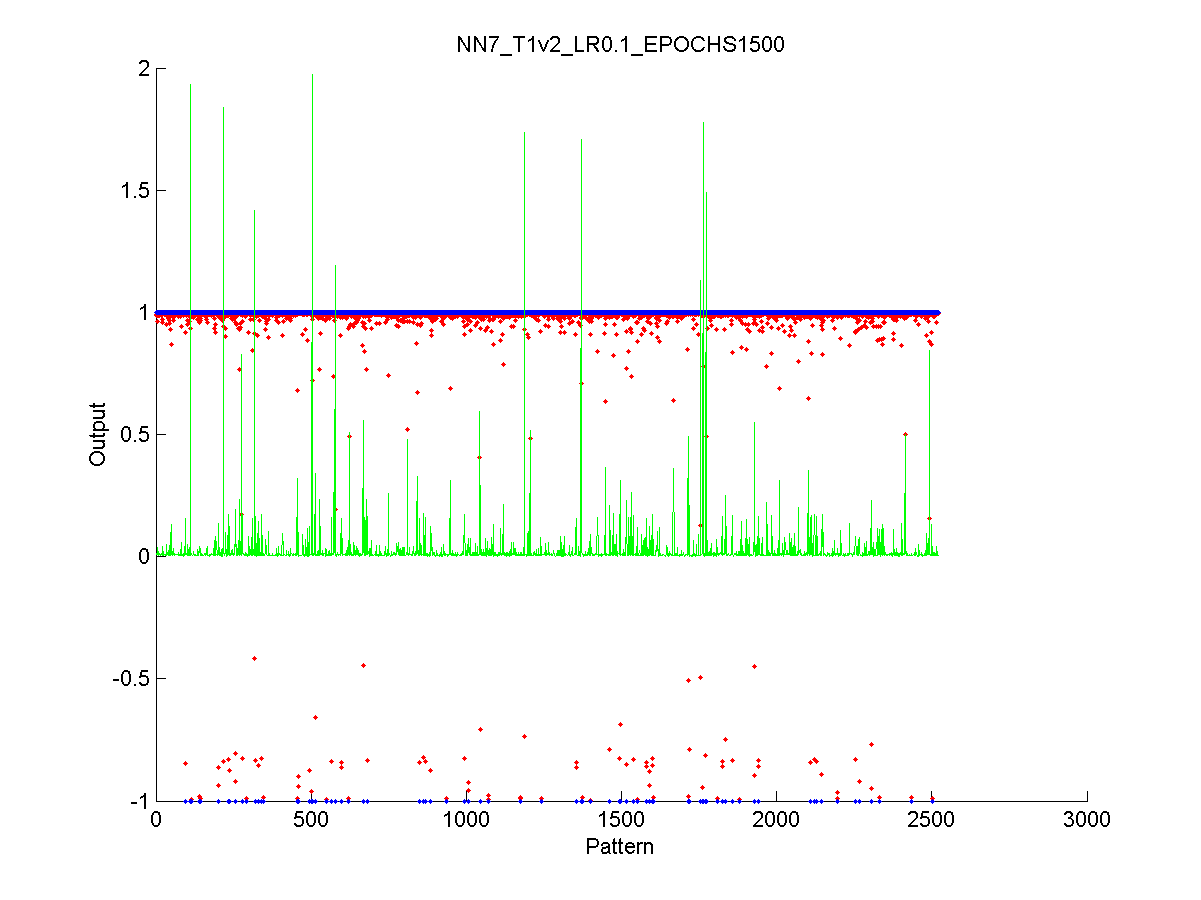

Figure 41 Output of the neural network after the validation stage 109

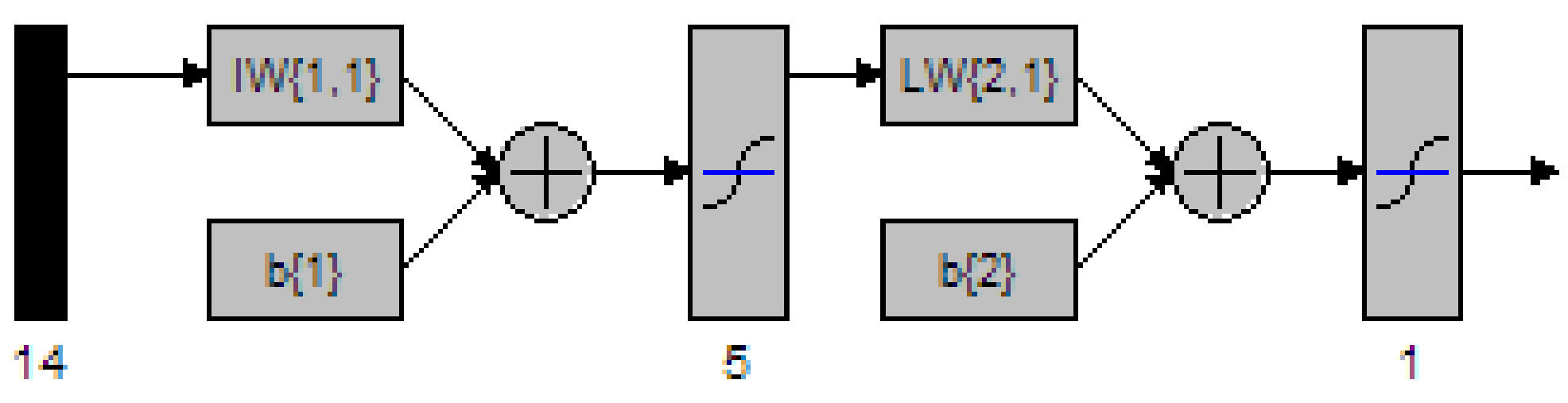

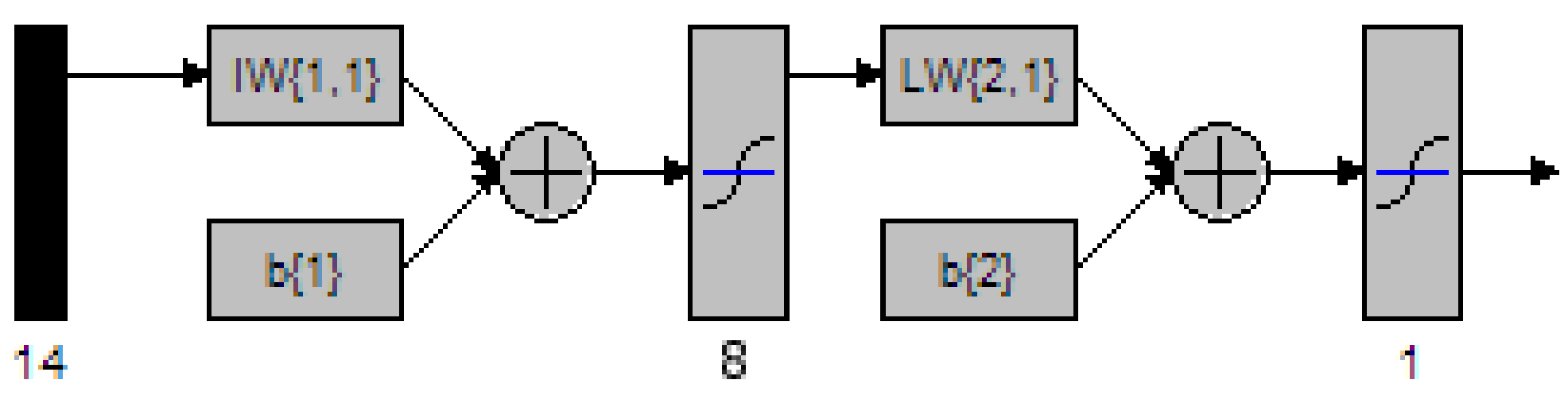

Figure 43 MATLAB depiction of a 2 Layer network with 5 nodes in the hidden layer 112

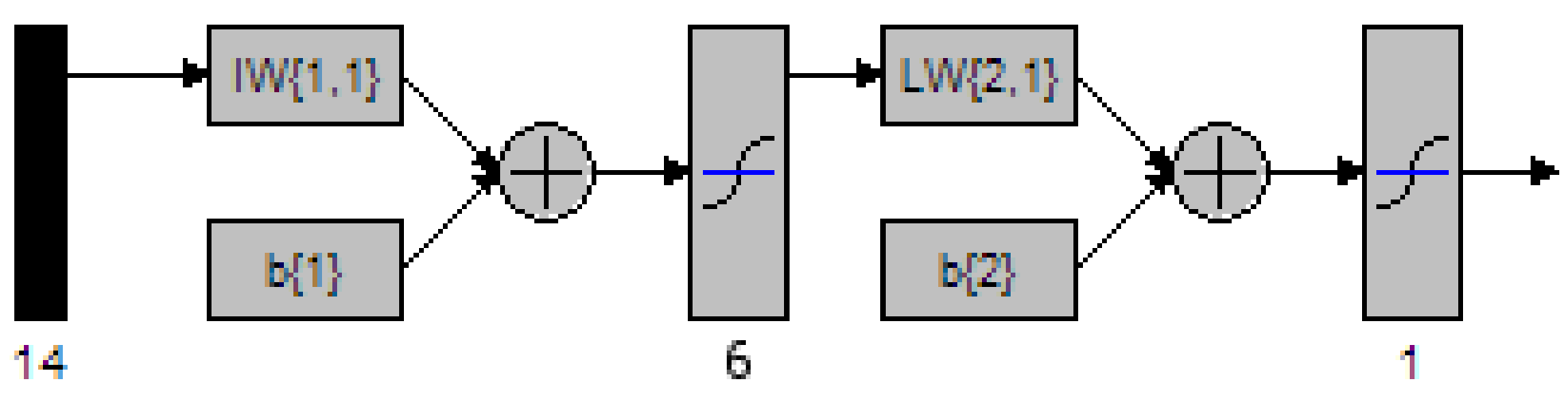

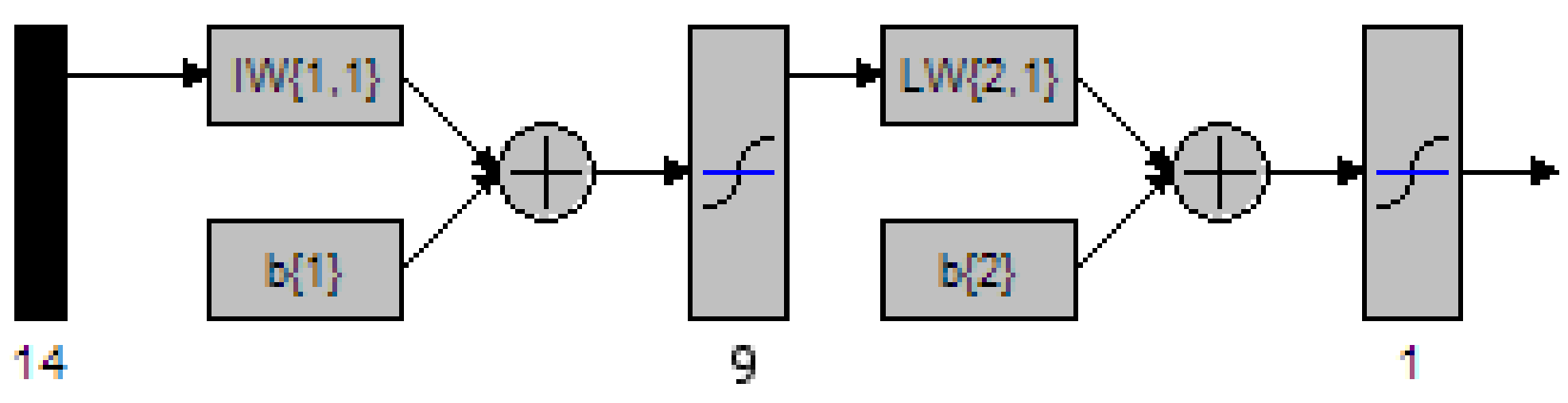

Figure 44 MATLAB depiction of a 2 Layer network with 6 nodes in the hidden layer 113

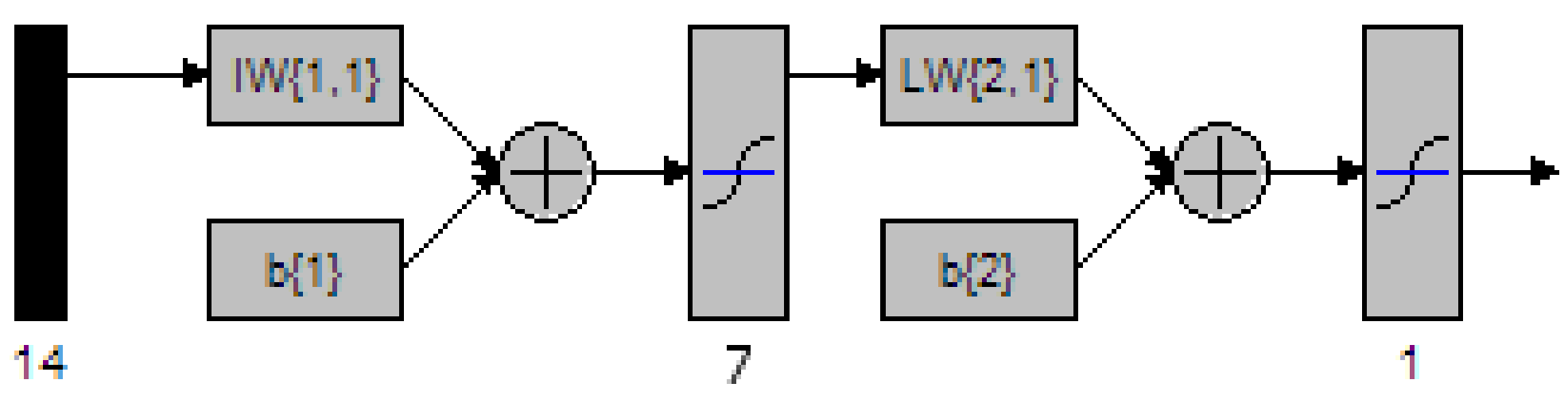

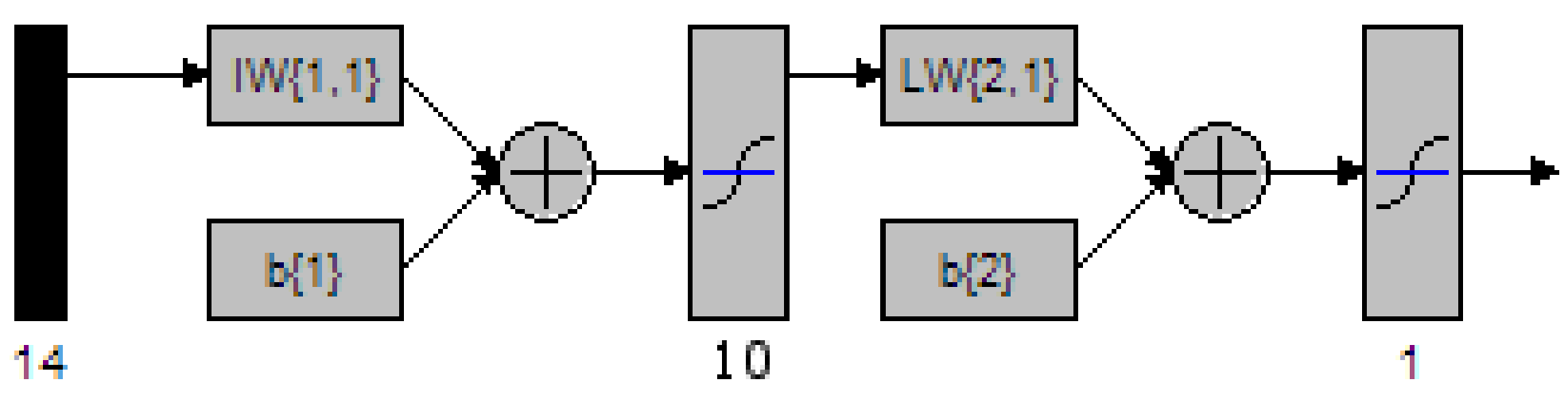

Figure 45 MATLAB depiction of a 2 Layer network with 7 nodes in the hidden layer 114

Figure 46 MATLAB depiction of a 2 Layer network with 8 nodes in the hidden layer 115

Figure 47 MATLAB depiction of a 2 Layer network with 9 nodes in the hidden layer 116

Figure 48 MATLAB depiction of a 2 Layer network with 10 nodes in the hidden layer 118

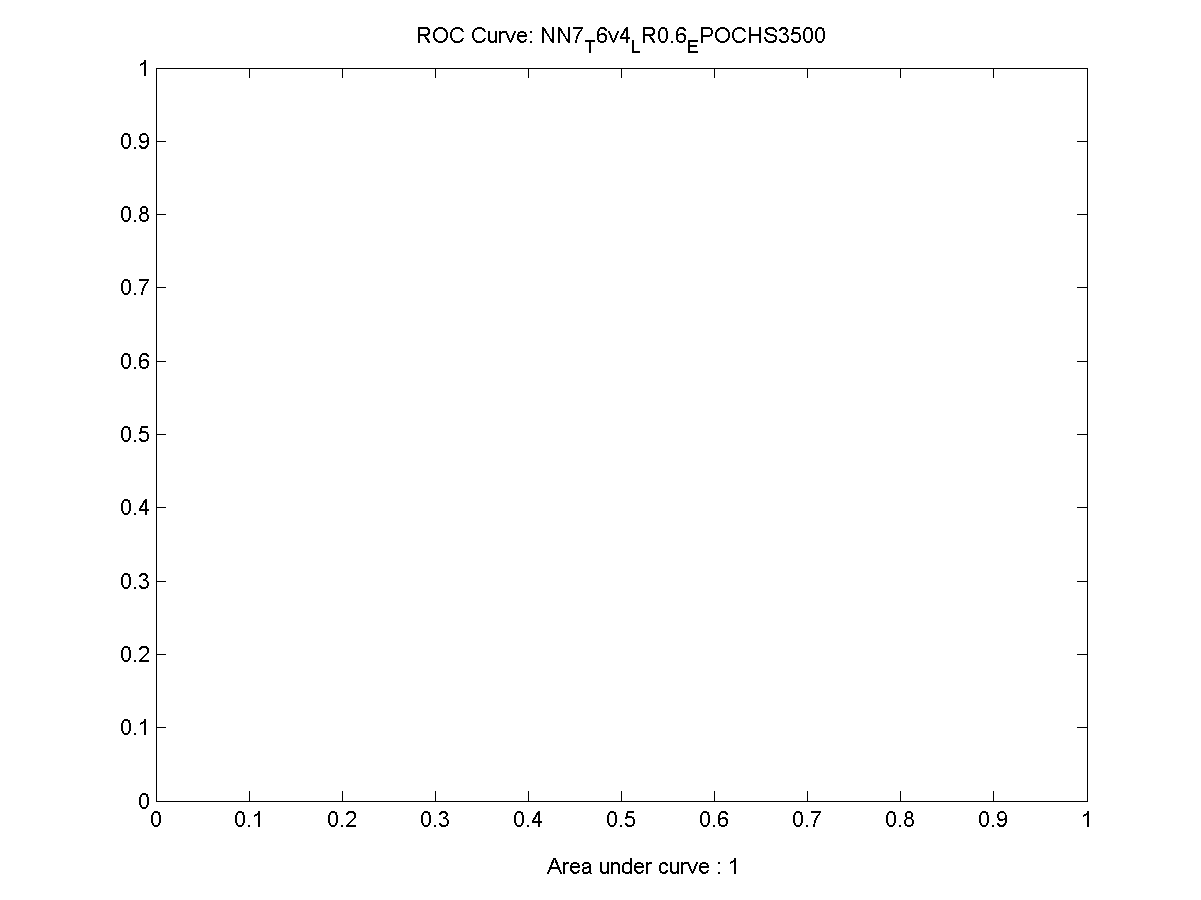

Figure 49 ROC Chart for the best performing network 119

Figure 50 Output from the training data. 120

Figure 51 Performance of the final network while training 120

Figure 52 Output from the validation data 121

4. Glossary of Terms

|

4m's |

The four ms by FMS |

|

Bad Debt |

Unpaid Credit. Up until a while ago fraud was written of as bad debt, however they are fundamentally different |

|

BP |

Back propagation, used in the training of a feed forward neural network |

|

Cell |

A receiver or transmitter which a GSM phone communicates with |

|

False Negative |

Incorrect classification of an event considered to be TRUE; the event is given as FALSE |

|

False Positive |

Incorrect classification of an event considered to be FALSE; the event is given as TRUE |

|

FML |

A Fraud Management Company |

|

FMS |

Fraud Management System (A system used to detect and manage fraud) |

|

GSM |

Groupe Speciale Mobile, also known as Global Systems for Mobile Communication |

|

Internal Fraud |

Someone in the company is using inside knowledge to defraud the company |

|

IP |

Internet Protocol |

|

Means |

The nature of the fraud used to satisfy the motive |

|

Method |

The detailed method used in 4m's classification |

|

MLP |

Multi-layer Perceptron |

|

Mode |

The generic fraud method used |

|

Motive |

The objective of the fraud |

|

NN |

Neural Network |

|

NRF |

Non-Revenue fraud. Intent to avoid the cost of a call, but no intention to make a profit from it |

|

PABX |

Private Branch Exchange |

|

PRS |

Premium rate service |

|

True Negative |

Correct classification of an event considered to be FALSE; the event is given as FALSE |

|

True Positive |

Correct classification of an event considered to be TRUE; the event is given as TRUE |

|

UMTS |

Universal Mobile Telecommunications Service |

5. Introduction

The project aims to detect fraud in the telecommunication industry from the perspective of the customer and the telephone calls that they make. Several different method of detection can be used, but I intend to present one method that I feel is the most suitable for reasons given later in this project. At the end a prototype system will be presented to prove that the chosen method of fraud detection is feasible.

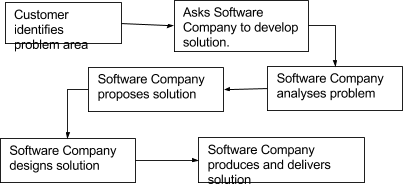

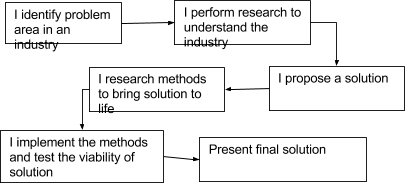

This project differs from the normal software engineering process, where the stakeholders would be identified. Requirements gathered from the stakeholders, with research into the system then taking place and the design processes following from this.

Rather it is an investigation into the how fraud occurs in the telecommunication industry and how it can be combated, with the added slant of a prototype system being implemented to show that a particular method can be used successfully to detect fraud.

Essentially I have identified a problem in the telecommunication industry, and after researching the problem area, I will propose a system that could be developed and produce a prototype of a system to show if it will work or not. It is not a case of building one prototype however, due to the nature of the prototype many will have to be created and empirically tested to find which prototype is the best performing .

This type of software engineering process, might be used for instance with a start up company or new business venture. They have found a market niche and they think they can exploit it by solving the problem. What is then required is a system of research and prototype development, if the prototype is not successful then it maybe that their current theory is not valid and a new direction of attack is needed.

The next chapter will include a brief introduction to the risk involved in this project and a summary of the research that I have done to make this project possible.

6. Risk

Often software engineers talk about inherent risk in each of the projects they undertake. This project is no different, even though the slant of this project is slightly different to what would be considered a "normal" software engineering project.

Pressman highlights eleven key components in overall risk for a project; however only a few can be uniquely attributed to this project 1 :

- Is the project scope stable?

8. Are the requirements stable?

10. Are there enough people on the team to complete the task?

As can be seen each of these key risks are associated with man-power and time taken to complete the tasks. If the project requirements are not stable, then the likelihood of a successfully completed project is minimised, since it is obvious that the requirements gathering process will be failing, thus indicating that the customer will not get the product they wanted. In relation to

Additionally, if the project has varying scope it means that the project will not satisfy the requirements which it was originally intended to.

The two highlighted risks can also have an adverse effect on the number of people needed to complete the task. The longer it takes to tack down a suitable product with stable requirements and a well defined project scope, then the more people the software development team are going to need to be able to successfully complete the project.

7. Research

7.1 Chapter Summary

In this chapter, various methods to detect telecommunication fraud will be investigated. This meant that I had to understand the telecoms industry. From this, I discovered that the telecoms industry is massive, with many different sectors; therefore a tool to detect general fraud is impractical for a project. This led me to focus on a subset of the industry. Further researching into this sub-sector, I found again that there are many different fraud types and methods to detect such fraud. Therefore I decided to further refine the category of fraud I was looking for.

Once I had decided on the type of fraud I would detect, it was important to understand the methods used to detect the fraud. It became known that the most suitable solution for me is to create a Neural Network based solution for reasons established in the following section.

7.2 Investigation into the Telecommunications Industry

The telecommunication sector is a huge arena. Each area of the sector covers a vast domain of communication. Identified below are several areas in which the telecommunication companies operate. These are mobile phone telephony, traditional land based communication, data transfer and the Next Generation mobile services.

7.2.1 Mobile Phone Telephony:

The phone system that is in use throughout Europe and the majority of the world is a standard called GSM (Global Systems for Mobile Communications). Each mobile phone registers itself to a "cell" (hence cellular phone) with which it can communicate by broadcasting over the airwaves to it's cellular base station, which will then essentially form a traditional circuit switched network with the destination 2 .

Traditionally cellular services offered have been more expensive than fixed line services, but are of similar nature and hence when setting up customer accounts services similar processes are adhered to; and accounts have to be paid for in a similar way. i.e. via a contract in which payment is required at the end of each billing period. Normally the contract would include a free phone as part of the deal.

However, more recently prepaid credit schemes are being used where the customers pay "up front" for the services they require and this includes having to buy the mobile phone. Prepaid credit was introduced into Europe in early 1996 3 as a method for the telephone operators to reduce the risk of having "bad credit" users on their system (people how have failed credit checks due to issues such as late bill payment). The system follows the same principle as the prepaid card schemes that have been used on public telephone systems for many years. The user buys a certain amount of "talk time" minutes from a retailer and inputs this into their mobile phone. The telecomm company is then aware of the credit available to that customer. Once the customer has used up all there credit, the phone will become unable to make out going calls (expect for emergencies and credit top up). This has been extremely popular with the teenage market, where contracts for mobile are not possible.

7.2.2 Fixed Line Telephony:

The traditional bread bearer of the telecommunication industry, with nearly every house (95% for 1999-2000) in the UK 4 having one or more telephone lines. Over these, normal voice traffic occurs, but in the last 10 years substantial increases in Internet Traffic, as many households get wired on to the Internet and drastic increases in daily use of the Internet (October 2002 reported that 45% of households have access to the Internet) 5 , have forced the telecommunication operators to reconsider the pricing structures they offer for their customers.

The services of a fixed line system are normally contract based, with the bill being settled by the customer at the end of each billing period.

Traditional operation of fixed line telephony is based on circuit switched networks. Which when a call is started, the local switch at the telecommunication substation makes a circuit (possibly via other switching stations) with the remote switch, which in turn rings the dialled telephone number. This circuit is then maintained for the duration of the call, and all information follows one fixed path to the destination 6 .

7.2.3 Data Transfer:

Initially data transfer services consisted solely of a carrier such as BT, providing a permanent connection to the Internet or between a companies' network. Essentially a dedicated communication line is being placed between both of the ends. Heavy contracts between the provider and the customer are drawn up and depending on the contract, in which payment terms can consider the quantity of data transferred as well as the speed of the line and what it is being used for 7 . It must be noted that BT normally provide the communication infrastructure, with other companies acting as partners reselling the service. This was initially put in place to stop BT becoming a monopoly 8 . These services where expensive, and designed mainly for the corporate sector. Because of leased line pricing structures and the work which needs to be carried out to connect customers to BT networks, leased lines were never meant to be available to the general public.

Other data transfer technologies exist and are coming to the forefront; ADSL (Asymmetric Digital Subscriber Line) and DSL (Digital Subscriber Line) are designed to operate over normal twister pair copper cable and thus are potentially available to every home in the UK. With the recent introduction of broadband Internet access services such as ADSL and DSL, providers have had to put in place extra facilities to handle the increased traffic, as they are responsible for the routing of the data on to the Internet.

7.2.4 Next Generation:

This is where the distinction of services differs from tradition mobile and fixed line services. Next Generation services more commonly known 3G are systems offering services such as video conferencing, video on demand, broadband Internet access across the air waves and are just some of the facilities that telecommunication companies are gearing up to accommodate.

The technology that 3G communication operates on is similar in nature to the method of current GSM, in the sense that each handset communicates with the base station in its cell; however, it uses a new communication protocol called UMTS (Universal Mobile Telecommunication Service). UMTS communicates on different frequencies and in a slightly different method to GSM, which allows vastly supplier data transfer rates 9 with the added advantage of allowing the mobile telecom companies a smooth transition between technologies.

Unfortunately for the telecom companies they invested a lot (£billions) of money in to acquiring the licenses for the use of the frequencies required by UMTS, so take-up by consumers may be slow as the telecomm companies may want to recoup some of their cost by heavily charging early adopters for use of the services. 10

Each of the above areas, have very similar sub-sections that when combined provide the final service to the customer (with the exception of data transfer services).

- Subscription: This is the initial contact that the telecommunication operator has with the customer. They will establish and verify the details of the customer. Once completed, the company will move on to the next stage of the process. This process will only happen once per client.

- Activation: Once the customer’s credentials have been verified and the subscription process has been completed, the customer will be set up on the network. This process may involve an engineer being used to create a connection at the user’s premises, or in the case of a mobile phone, the SIM card being activated. Like the previous stage (subscription), this should only occur once for the customer.

- Customer Use: The customer has been set up on the company’s network, and will be allowed to use the service with in the limits of the agreed parameters, such as credit limits and usage agreements. This will be established at the start of the contract, but will run throughout the lifetime of the agreement and depend on the any renegotiations of the contract.

- Billing & Payment: Coinciding with the “Customer Use” is the Billing of the service provided along with the payment, this could be seen as two separate sections, as they require both parties cooperation. The company will invoice the customer for the use of the network at set intervals (monthly, quarterly etc) outlined in their agreement. The customer is then expected (required) to pay for the services that they used in a timely manner set out in their contract.

Figure 1 Process of a customer of a telecomm company

- Termination of service: Once the contract has either been revoked by the operator or ceased at the request of the customer. The telecommunication company must issue a final invoice and then terminate the user's privileges for the system.

The previous processes (figure 1) will only occur once per account item, such that if the user request a new line or additional services, then the above steps will be repeated and will generally pursue the same structure.

Two important very important areas are in the previous process (figure 1); customer use, and billing. Whenever the customer uses their phone, information about the call parameters is logged; using this information the customers bills are calculated. Information is normally logged in what is called a CDR (Customer Detail Record or Call Detail Record, both of which can be used interchangeably) is as follows:

- Customer Number or ID

- Destination Number

- Call Type (PRS, International, Local etc)

- Call Start time

- Call time type (off-peak, on-peak)

- Call End time

- Duration and final cost of the call

The secondary bullet points are by-products of the parent point and are also sometimes a culmination of other points. For instance the final cost of the call, is a combination of call type, time of call and duration of the call. These by-products maybe generated at the time of the call so to speed up generation phone bill when it comes to the end of the customer billing period, or it might be generated when the bill is being worked out. The later requiring less storage space in the companies calls logs.

Other telecomm companies are another major source of revenue for a telecom company, they use a process called "Interconnection Charging". The telecom company will charge each of the operators for every call originating on the competitors network that is being routed to their network. For instance, BT will charge NTL a set fee for each call originating on NTL with a destination on BT. This practice is very common between mobile phone operators, as well as fixed lines operators 11 .

Now that the levels of service that the companies offer has been established, it is important to decide which areas that this project will concentrate on; this is because of the differences in the core services. An example, ADSL broadband accounts will not operate in the same way as mobile telephone accounts operate, thus the business processes and the implementation will be very different.

This project will focus on detecting fraud that can occur with circuit switched based communication methods, particularly call based systems not derived using IP solutions. In the next sections, topics will be covered with the emphasis on Fraud occurring in the following sectors:

- Mobile;

- Fixed Line,

Bearing the above market sectors in mind it must be noted that when detecting fraud for both sectors, only attributes that are present in both sectors can be used as indicators of fraud.

Common attributes of both Mobile and Fixed Line telephony in particular are the types of calls that take place. A mobile user will make calls to other mobiles, fixed lines (local and national), international numbers, free rate numbers, Premium Rate Service number (PRS). The same can be applied to users of fixed line services.

However when considering items that are dissimilar in both the technologies, issues such as when a mobile user makes a call, the current cell that it is in is also recorded. Obviously this is of no use when analysing call data for fixed lines.

7.3. Investigation into Fraud

Fraud on its own can be defined as "an intentional deception resulting in injury to another person" or "a person who makes deceitful pretences". Some useful synonyms can also be used do describe fraud [con, swindle, racket, hoax, scam, deceit, deception] and what a fraudster is [impostor, pretender, fake, faker, role player].

Fraud in general is a very broad subject, but can normally be boiled down to one easy description; "The need to make money". Fraud can be committed in many ways, for many reasons other than just "The need to make money" making many different people from all lifestyles, susceptible to fraud. Other reasons include crackers wanting kudos from their peers (breaking in to a system and taking information or money); people wanting to save money rather than make money, the list continues.

7.3.1 Who suffers from fraud?

In the end we all do, for instance: Fraud in the insurance industry due to false claims, can increase every customers premiums; Fraud in the financial industry, can mean higher rates of interest on things such as mortgages, loans and credit cards while also reducing the interest rates for savers; Fraud in the telecommunication industry can result in higher call bills. All because the companies that are being defrauded, still need to make money, so any loses due to fraud are normally passed on to the consumer.

Fraud against the individual is also another topic that needs a brief discussion. Fraud against the individual can take many different guises: A street seller may "persuade" a person into donating money to a dying child; A phone scammer may persuade people to part with their credit card details for a fictional product; or an email may dupe people into depositing money into a Nigerian bank account with a promise of returns far greater than those given.

The physiological effect of fraud as well is unmeasured but considerable. It is easy to see that if an individual (rather than a corporation) has been defrauded, a once normally confident person can easily be transformed into a person who no longer trusts his or her own judgement. Other than the financial difficulties induced, the fear of criticism from peers is also high, since perhaps the subject had to request to borrow money from a family member or business colleague. The increased fear of the parties finding out may result in anxiety, guilt, and fear of being held responsible. Possibly culminating in depression. 12

For both types of fraud (Fraud against a corporate and fraud against and individual) the number of different styles of fraud is uncountable. When the companies or law enforcement agencies think they have the hatch battened down on fraud, another scheme for the fraudsters presents itself and the cycle continues.

7.3.2 Who commits fraud?

Now that we have established a reason why fraud is committed, we must also ask what type of person commits fraud.

The type of people that commit fraud can be broken down into at least two categories:

The Opportunists; The opportunist commits fraud as a one off. Word of mouth may spread that a particular company is susceptible to fraud using a certain process . For instance, obtaining a loan by using false details. Or faking an injury to obtain more financial aid from an insurance company. The frauds in this case are normally committed by normal people who essentially want to gain a quick buck.

The Crime syndicate; The crime syndicate will normally commit fraud, to provide money for other crimes such as drug trafficking. They will hit a service for all the money that they can get. The people who operate theses systems, unlike the opportunist are very professional and will always be looking for new methods to defraud people and companies, since it is in their interest to keep providing extra money to the syndicate.

Fraud is unstoppable, even when an avenue to fraud has been closed, another will present itself; and as the fraud detection systems get more complex, the methods used to defraud people will also become more complex.

7.4 Investigation of Fraud in the Telecommunication Industry

When establishing what "Fraud" is in the Telecommunication Industry it is important to understand several questions.

- What is Telecommunication Fraud?

- What does Fraud mean to the Telecomm companies?

- How is the Fraud perpetrated?

- How do Telecomm companies respond to fraud?

- Some key attributes which may identify fraud.

7.4.1 What is Telecommunication Fraud?

The Telecommunications (Fraud) Act 1997 highlights effectively what Fraud is in the Telecommunication industry. In broad terms the act states, "To use or obtain a service dishonestly" and including "To use or to allow the supply of a dishonest service" is considered to be fraud. 13

Fraud in the telecommunication industry can be broken up into two major sections. The first being revenue based fraud, and the second being non-revenue based fraud.

Revenue fraud consists of any type of fraud with the purpose to make the individual who is perpetrating the fraud, money. This can be achieved in such ways as:

- Selling high cost International calls to people by severely undercutting the cost that the telephone company charges;

- Calling high rate PRS lines, with no intention to pay for the cost of the call.

Non-revenue fraud is normally fraudulent use of the telecommunication network for reasons other than making money. Motivations for non-revenue fraud include:

- Removing any chance of criminals being surveyed or having phones tapped, by criminal investigation agencies by making illicit use of the network;

- To provide free or heavily reduced call costs to friends and family;

- To show to their peers (other crackers) that they do have the skill to breach the telecomm companies' security.

7.4.2 What does this mean to the Telecomm companies?

It has been reported that worldwide that fraud accounts for approximately 3% 14 of the Telecomm companies' annual revenue. In 1999 the UK alone suffered losses of at least £720 Millions split over the following categories. 15

|

Calling Card |

Cellular |

International |

Other |

Total |

|

$150 Millions |

$100 Millions |

$500 Millions |

$250 Millions |

$1100 Millions |

Table 1 Losses Due to Fraud in the UK (in dollars)

However, this only accounts for fraud that has been detected, since fraud can often go undetected and unreported. Fraud may go unreported or at least unpublished due to the nature of business contracts and customer confidence if the perceived levels of fraud are high in relation to the revenue generated. The knock on effects of fraud and lost income include higher bills as the losses are passed on to the customer, and higher churn rates for the company when more people are unsatisfied with the service and the perceived security that the company offer. Add this all together and it can negatively effect share holders confidence as annual revenue is decreased and expansion is drawn back.

7.4.3 How is Fraud Perpetrated?

Telecomm Fraud can be broken into several generic classes. These classes describe the mode in which the operator was defrauded and include subscription fraud, call surfing, ghosting, accounting fraud and information abuse 16 . Each mode can be used to defraud the network for revenue based purposes or non-revenue based purposes.

7.4.3.1 Subscription Fraud

Subscription fraud occurs when an unsuspecting party have their identity stolen or a customer tries to evade payment. Essentially, personal details provided to the company are erroneous and designed to deceive the company into setting up an account. Reasons for this may include a customer knowing that they are a credit liability due to CCJ's or other credit problems; or a fraudster needs to obtain "legitimate" access to the telecomm network to perpetrate further modes of fraud.

Subscription fraud causes serious financial loses to the telecommunication operators, but in many instances may not be attributed to fraud. If someone does not pay their bill, then the telecomm company has to establish if the person was fraudulent or is merely unable to pay. This may result in a lot of subscription fraud being classified as bad debt. The BT Group in 2001-2002 estimated that bad debt cost the company ~£79 million. 16

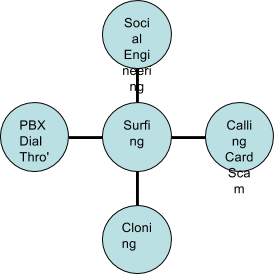

7.4.3.2 Call Surfing

Call Surfing is when an outside party will gain unauthorised access to the operators network through several methods such as call forwarding, cloning, shoulder surfing.

Call Surfing can include gaining access to a company's PABX (Private Branch Exchange) either via social engineering, or by lack of security. Social Engineering could be considered as: A person rings the company's telephone administrators claiming to be a BT engineer performing a line test, they ask for the password so that they can negotiate access to the call-back of the PABX; or a employee in a large company receives a call from a person claiming to have got the wrong extension, and requests if they could put them through to extension 901, with 9 being the external dialling code of the PBX and 01 being the international prefix. 18

These may be unrealistic scenarios, but it is all too easy for someone to gain access to a system this way. Once the cracker has access to the PABX, they can use it to forward calls internationally or to premium rate service lines. All they pay for is the cost of the call to the company, while the company picks up the cost call to the proper destination. The cracker may even escape paying for the original call if they covered their tracks, for instance via subscription fraud.

Cloning of mobile phones is another issue that will arise, especially since the early mobile phones operated on analogue with the signal emanating from the phone being easy to detect and read, and thus the technology used to identify each phone uniquely was susceptible to being read. With this information, the fraudster would be able to reprogram one of their own phones to match these unique details. Once done, the con artist would be able to use the phone to make all the calls that they needed without the original phone owner knowing (until they get the telephone bill that is). 19

7.4.3.3 Accounting Fraud

Accounting Fraud can occur through manipulation of accounting systems and maybe used to help someone avoid having to pay for the service. Normally this is an internal problem. Accounting Fraud would normally occur, when someone would want to try and get cash back at the end of their billing period, or have their bill reduced. 20

7.4.3.4 Ghosting

Ghosting requires knowledge of the internal systems, maybe an employee would set up an account that would not need to be billed or remove billing details from the system. On the other hand, schemes may involve creating a piece of tone generating hardware that will fool the switch centre into thinking that a call might be a free call, or is operating from a public telephone. Essentially, they are "Ghosts" on the system as there is little or no trace of them ever being present on the network. 21

7.4.3.5 Information Abuse

Information Abuse occurs when an employee can use the telecommunications companies software to access privileged information about clients or systems. This information maybe passed on to third parties and used in further fraud. However, it is not solely limited to this, for instance company A might place spies into company B to find out information about any alliances that company B might have. Again, this is an internal fraud. 22

FML (A Fraud management company) developed a system called the 4m's to help fraud analysts decide if a particular case they are studying is more than likely fraudulent. It can be used to understand where each of the previously (section s 7.4.3.1 – 7.4.3.5) mentioned methods to perpetrate fraud and the reasons for doing so fit in with each case of fraud. 23

The 4m's can be defined as Motive, Mean, Mode and Method:

- Motive: This is the reason why they will commit the fraud. This could range from generating money, saving money, kudos or just malicious intent.

- Mean: Used to satisfy the motive. If it is revenue based fraud, how are they getting their money: by selling International calls at a reduced rate; calling PRS services; using access codes supplied by an informant.

- Mode: This is the generic method used to commit the fraud. Such as subscription fraud or call surfing.

- Method: This is the way in which the fraud was committed. For instance, how the call surfing was achieved.

An example of where this system of classification could be used: A person orders a new telephone line with incorrect identification details, once the telephone line has been installed; the person offers International and PRS calls at heavily reduced rates. Then after the billing period the person vanishes and never uses the phone again.

Fitting the above example into the 4m's classification we can see that the persons Motive was to make money. Their Means was via Call Selling. The Mode was using vulnerability in the telecom companies subscription process (Subscription Fraud) and the Method was using False details with no intent to pay for the services used.

A second example of where this classification could be used is: An employee who works in the calling card printing division sells valid pin numbers for pre-paid calling cards to third parties.

Applying the 4m's classification, we can see that their Motive would be to make money. Their Mean is Facilitation to supply fraudulent access to the network. The Mode is via Information Abuse and the Method was disclosure of pre-paid card number.

7.4.4 How do Telecomm Companies Respond to Fraud?

Telecommunication companies will respond to cases of frauds in a manner that is similar to those used in the financial industry.

The telecommunication operator should have a company wide fraud management scheme, which can be broken down into four discrete steps (figure 2) 24 .

- Prevention

- Detection

- Analysis & Investigation

- Resolution & Reaction

Prevention is the most important, if the fraud is stopped before it happens, the less money a company will lose.

Figure 2 The Fraud Management Cycle

However, if it cannot be prevented the next best thing that the can happen is to detect it either when it happens or in the early stages of it occurring. This will mean that losses will be reduced from what they would have been if the fraud had gone undetected.

Once a case has been detected, analysis must take place to ensure that a customer account is being abused, since if service is withdrawn for insufficient reasons, customers maybe entitled to pursue legal action against the company.

Once sufficient motive has been established, it is then up to the company how they choose to react. For instance disabling the account and placing measures to prevent (stage 1) the type of fraud from reappearing, is the ideal solution.

Unfortunately, the measures taken are normally reactionary, since the fraud has already occurred. The company will receive an indication that a customer account is potentially fraudulent. It is up to the company to investigate the claim. Only then once enough evidence has been established that fraud was taking place with the customer can the telecommunication company can take appropriate action to remove the fraud from the network

7.4.5 Some Key Attributes which may Identify Fraud.

A telecommunication company will look for several key attributes when trying to ascertain if a fraudster is trying to use their network 25 :

- The customer is new to the network, and has requested many features of the phone system straight away.

- The customer has high average call duration and high average calls cost, can indicate PRS or International fraud.

- A customer has a unnaturally low spread of call types (i.e. they are mostly PRS calls or International calls).

- The average duration of the time between calls is very small and differs very little, can indicate auto diallers.

It must be noted again that any of these attributes may not correctly indicate fraud (it could be a legitimate user), hence therefore a human investigator (part of the fraud team) would have to establish if the fraud alert from a fraud management system (FMS) is a valid alert.

7.5 Methods to Detect Fraud

Clearly, telecomm companies will not tell us or the public the methods fraudsters use to defraud their systems. However, it is possible to find some of the methods that the fraudsters use, using a variety of sources such as:

- The Internet is a good source to find information from fraud groups. Unfortunately many of these groups are not about to tell strangers how they can defraud the networks; if one of those strangers happens to be the telecomm company then the methods used by the group will become outdated.

- Fraud Forums are organisations, which are set up to accommodate the combined interest of all the companies in a particular market. An example of this is TUFF (The Telecommunications United Kingdom Fraud Forum). They operate by charging subscription fees (normally so high that only telecomm operates can join; so to allay any hope of a member of the public joining to find out the fraud detection methods are used), and then between their members they will tell each other about experiences with fraud and how to effectively deal with it.

There are several known and established methods of fraud detection in the telecommunication industry. What follows is a discussion in to the methods that I found the industry are currently using.

Telecommunication companies, like financial institutions, employ people to detect fraud occurring within their business domain. The role of the fraud analyst is to find fraudulent use of the services that the company offers. With this in mind we must take note that each investigation costs the company money (for instance, one fraud analyst may be able to investigate ten customers per day). Therefore, if a high number of customers who are considered fraudulent turn out to be non-fraudulent, the company loses money and resources that could have been used to investigate real fraudulent cases has been wasted. It is in the interest of the company to find as many fraudulent users of the service, while limiting the time spent dealing with false positives.

The fraud analyst may apply the 4m's principle to ascertain what fraud is taking place, how it is taking place, and why it is taking place on their network. Once the case is understood, the fraud analyst will be then able to recommend changes to the companies operating procedures, to help stop this type of fraud from happening again.

Figure 3 Roles where an FMS Tool maybe used

Fraud Management Systems (FMS's) are the tools used by the fraud analysts, and their role is pivotal in ensuring that the company detects and highlights as many fraudulent accounts as possible, by limiting the number of customers the fraud analysts have to deal with.

This is especially important in the telecommunication sector due to the shear wealth of data that is generated every time a phone call takes place, it would be impossible for a fraud analyst to monitor every customer account on the system, meaning the task of detecting fraud is almost impossible.

The FMS must provide a substiantially low False Positive Rate (FP) combined with a low False Negitive (FN) Rate. These factors can be understood to mean, a low proportion of cases which are considered to be fraudulent turn out to be clear, likewise a low FN implies a low number of people who are actually fraudulent get past the FMS checks. Obviously you want the system to catch all fraudsters, while minimising the number of people it might wrongly accuse.

It must be noted that a successful FMS is not to be solely used by the fraud analyst; it must also be used elsewhere in the business process (figure 3) and be able to fit into the whole fraud management scheme. Suggestions to which department has control of the FMS include finance departments, security departments and customer care. It is an obvious implication that all groups should have a role in the use of the FMS, especially if there is a company wide policy dictating response to fraud.

Figure 4 Subscription Fraud

At this juncture it is important to specify the type of fraud that the project will focus on detecting. Due to the shear number of different types of fraud available to study, it is important to concentrate specifically a single type of fraud for this project.

Fraud that occurs from the customer perspective, such that a developed system will detect when a customer is making fraudulent use of the operators network with means and method like Call Selling, PRS abuse and other Non-Revenue Fraud. These are normally related to the modes surfing (figure 5) and subscription fraud (figure 4), since either way uses methods to evade payment structure of the network operators.

Figure 5 Suring Fraud

Reports suggest that at least 50% of operators' losses due to fraud are caused by "Call Selling", "PRS Abuse", "Internal Abuse" and "Non-Revenue Fraud" (All three will be collectively referenced as Call Selling from now on) 26 . It is important to note that even though it may be a customer who is caught defrauding the network, it may in fact, be an internal problem, with employees supplying external "agencies" with commercially confidential material.

It is import to find where call selling fits into the 4m's classification and also where it fits into the four stage fraud management scheme. Call selling is normally detected as a by-product of monitoring customer use of the network; and since the fraudulent customer is already on the network, we can say straight away that our fraud management stage 1 (prevention, figure 2) has failed. Therefore, anyone who has been caught fraudulently using the network can be said to have bypassed the subscription fraud detection process, since they would have either applied to use the network with false details, or with correct details but no intention to pay for the services used.

Indicators to an active subscription fraud can be identified by checking that the customer is who they say they are. Checks are normally carried out to identify the background information that the customer supplies are valid; these can consist of voting registrar checks, credit application checks and previous address checks. Systems also exist that can cross-reference a customers applications with customer applications of other companies to find consistencies and inconsistencies between the supplied details.

Therefore, we can go through the four stages of the fraud management lifecycle, and amend the subscription process. Unfortunately detecting that someone intends to defraud the network by checking subscription details is never 100% successful (as they might have used legitimate details but had no intention to pay for the services), so the next process is to detect when fraudulent use of the network occurs. Once fraudulent methods have been identified, the company can amend the system to help detect the use earlier. Since this is always going to a be reactionary process, the earlier you find the fraud, the earlier you can put a stop to it and the more money will be saved.

This is where establishing when call selling is taking place requires a FMS (Fraud Management System), due to the volume of call data generated whenever customer use their phone system. There are several accepted ways to detect fraudulent use of a telecommunications network, these include 27 :

- Matching a user call usage pattern to a know pattern that fraudsters use.

- Establishing that there is sufficient change in a customer's usage pattern to warrant investigation.

- Ascertaining if a customer's usage profile has exceeded set limits defined by the fraud analyst.

Firstly if the telecommunication company is well established, then they are more than likely going to know the call patterns associated with fraudulent use. Therefore, one can assume that if a call pattern is the same as an established fraudulent pattern then the customer account the call pattern belongs to warrants further investigation.

Unfortunately, things are never actually this easy. Fraudsters understand that to be able to defraud the telecommunications companies in the future they must evolve their cunning methods, as they also know that telecommunications companies are not stupid and will spot when particular frauds are occurring. Likewise, the telecommunication companies know that to keep the fraudsters at bay they must constantly evolve their methods of detection and prevention. It seems like an appropriate analogy would be that of a two horse race, with the fraudster always one step ahead, so when the phone operators catch up, the fraudster will step up an extra gear and move ahead again.

Some of the tools that the fraud analysts can use when detecting fraud can be summarised as follows:

- Rules based systems: Based on knowledge obtained from experts in telecommunication fraud, the fraud analysts will create a set of rules that will try to match certain aspects of a customers profile with a set threshold.

- Bayesian Knowledge Networks: A graph of related events is created and between each is an arc based on the dependencies of one event on the another. We could then build up a solution from evidence presented to the network based on conditional probabilities. Unfortunately, this needs a professional in both telecommunication fraud and Bayesian Belief networks. Without going too in-depth this solution has been proven to be less reliable 28 , than other methods.

- Neural Networks: Based on past data, a Neural Network should be able to classify and ascertain if an input pattern matches or has enough similarities to that of a pattern which the network has already learnt 29 .

Rules based systems 30 31 32 : Rules Based system require knowledge of the exact parameters of fraud. In addition, since there are seemingly unlimited methods to defraud via call selling, it would imply that the rule set required to capture the fraudsters would also need to be sufficiently large. This is not feasible considering each check may take a finite period of time, and the larger the rule set the longer the checks will take and for possibly little gain in fraud detection rates.

Imagine that there was a system developed to check customer accounts against 500 rules, now imagine that a group of fraudsters establish a new method to defraud the phone company. After a couple of weeks/months the company becomes aware of these methods and adds new rule to handle to this new fraud, however they have a 500 rule limit and need to drop of some other rules. How do they decide which rules to remove without making themselves susceptible to the older methods of fraud? Do they assume that no one will use the older tricks? That would be stupid, since they would be neglecting the opportunist fraudster who might only know the older methods.

Additionally, rules systems are not dynamic in their nature, they normally consist of checking the parameters of customer accounts against threshold values set up by the Fraud Analyst. Therefore, the rules may miss the fraudsters who have not managed to get themselves to the levels where their call patterns are deemed fraudulent.

Rules based systems are also open to internal abuse, since a person looking at the rule set could easily discern its internal workings. For instance if someone knew that if they kept the average cost of each call below £5.00 then all the fraudster has to do is make sure that their average call cost around £4.50. This is an overly simplistic example, but effectively highlights some of the problems with rules based systems.

Bayesian Knowledge network systems 33 34 35 : The parameters of fraud are know to the telecommunication company based on certain features ascertained from the customer base. The fraud analyst would then set up relationships between each piece of knowledge and associate a probability that given a piece knowledge, how much that particular piece of knowledge influences the event B, the event being in this case is the probability of the customer being fraud. For example given that the average call duration is x and most calls occur in the evening, is the customer fraud?

Systems have been researched that use two belief networks. The first network is modelled by the fraud analyst with the relationship between knowledge being established based on previous fraud that has been detected. The second is a network that is automatically generated from all the clear (non fraudulent) data in the network and a network is normally created for each customer class 36 . The data for each customer is then passed through both networks and results from both networks are considered on containing a belief of how fraudulent a customer is and the second a belief of how clear a customer is.

However, what if the fraud analyst missed some important relationships out when inferring knowledge in the system, how would the system respond? What if the customer was perpetrating a new type of fraud that had never been modelled before? My assumption is that the networks would not be able to respond sufficiently. For instance, if the customer simply never intended to pay for a bill, but the calling pattern was similar to one of average Joe customer.

Bayesian belief networks can be used to generate a better understanding of the customer base, by helping the fraud analyst discover relationships in the data that they might have otherwise missed. Michiaki, Taniguchi states that other methods of Fraud Detection exist which provide higher degrees of true classification rates, with lower false positive rates.

The Neural Network 37 38 39 ; Michiaki, Taniguchi have shown that Neural networks are the better at classifying fraud than the previous two methods (rules based and Bayesian knowledge). Depending on the construction of the Neural Network, rates of 85% classification with out a single mistake have been recorded.

What is a Neural Network? Kevin Gurney states:

A Neural Network is an interconnected assembly of simple processing elements, units or nodes, whose functionality is loosely based on the animal neuron. The processing ability of the network is stored in the interunit connection strengths, or weights, obtained by a process of adaptation to, or learning from, a set of training pattern.

Simply, given an input pattern, the neural network will discern from past training what class it assumes the pattern belongs. Essentially, during training each of the nodes in the neural network build up weightings to specific features presented in the training data.

It can be seen that a neural network tries to imitate the reasoning process of a human expert; where a human would build up an image of the solution by combing evidence and weighting each piece against knowledge against the experiences of similar problems. There may be many factors that a human will use to decide the best solution to a problem.

Unlike rules' based fraud detection methods and Bayesian belief networks, neural network will not need a fraud analyst to establish the reasoning the relationships between customers being fraudulent and the attributes, rather the fraud analyst will need to classify the customer accounts based on whether they think they are fraudulent or not. For the network to be able to classify data with accuracy, the data that it needs to use has too be of a good quality, if no relationships between features of data can be established then it may be unlikely that the network will be able to describe the weightings of the features in its internal system.

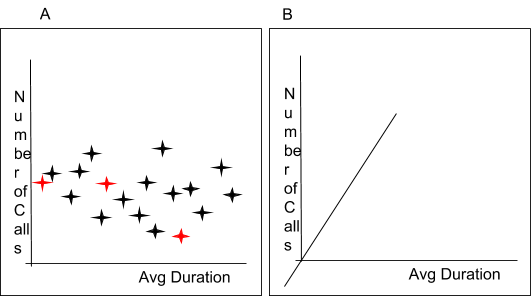

Neural networks are often use in data modelling or statistical analysis in problems where there are many nonlinear relationships. For instance, weather forecasting, financial forecasting and fraud detection. This is because neural networks have been shown to have an innate ability to classify non-linear problems. It may be good to show how this can be inferred in fraud with an example 40 :

If we look at two variables Number of Calls and Average Call Duration, with each point being a customer (see figure 6A), we have no way to draw a straight line between the two classes (fraud –red, and clear –black). Things start to get harder when we add more variables in and the number of dimensions increase (figure 6B) when drawing a hyper plane between the classes becomes nearly impossible.

Figure 6 A) Non-linear problem separation B) Added Dimensions

Neural network technologies are commonly used in pattern recognition precisely because they are good at solving non-linear problems, where there may be a pattern that can be discerned but it is very hard for us humans to see them. The more dimensions we have, the harder it becomes to separate each class of data with a line, plane or hyper plane.

Neural networks offer several other advantages over the two other systems of fraud detection (rules based and Bayesian knowledge). They also have the ability to generalise a solution; that is classify how it thinks a particular customer account is in relation to fraud. The customer account information does not have to exactly match the data that the neural network has been trained on. This can be good for detecting fraud that is not being perpetrated in the same manner as other fraud, but has similar characteristics. 41

Another case for neural networks is their ability to adapt to changing circumstances. Not only do they have the ability to generalise, they can also be retrained (once a sufficient training regime has been put in place) with new data, so if the fraudsters evolve their methods, then the neural network can be easily adapted to accommodate these changes, with little extra effort from the fraud analysts.

Neural networks suffer less from the problems of internal fraud attacks against themselves than other methods of fraud detection do. Neural networks have been considered to be black boxes, you supply data to the network and you get a response with out knowing specifically how the network came to its decision. Rules systems and to some extent Bayesian networks, are susceptible to internal fraud, in that a user of the system can infer the criteria used to establish if a customer account would be flagged as fraudulent quite easily. Because simply looking at the nodes of a neural network will not give any evidence as to how the neural network classifies its data, it would require a professional with masses of experience with neural networks to be able to assume any information describing the reasoning process. Therefore, in this sense the neural network is more secure than other methods of fraud detection. 42 43

Once the neural network has been trained, then the process of reasoning if a customer account is fraudulent, is very efficient. The reasoning process (internally) normally consists of matrix multiplications which can be carried out very efficiently. The most time consuming issue with the solution would be summarising the customer account from a database of all customer call information, which is a standard operation across each of the methods described in this chapter. Once the data has been summarised, it can be presented to the neural network and a response will be given almost immediately. Compare this to having to first summarise the data, and then trawl a rule set and compare each rule against the data. A rules system is effectively systematically analysing every customer variable for an account. This process can be more intensive and thus slower than the neural network method.

7.5.1 Why Call Pattern Analysis is not always enough

Call pattern analysis is not the only method of fraud detection that should be employed in the telecommunication industry. If we are having to capture the fraudsters when they are using the network, then they already have evaded out first check (ascertain who they say they are). Also the fact is that the customer may mimic a normal person, and then neglect to pay the bill after the second month. For instance, if we tie the system into the billing departments systems, we may notice that a person might say they are a company, run lots of international calls through it like a large company might, then close down when payment is due. What is to say that the company did not fold on purpose?

7.6 Consideration of Real Time Methods

Part of the emphasis of this project is to investigate Real Time methods used in Fraud Detection within the Telecommunication Industry.

There are two types of real-time behaviour in computer systems, HARD real time and SOFT real time, these are said to be "Traditional Real-Time Systems".

Hard real-time systems are normally associated with Hardware based systems, where the timing of the responses from the software controlling the hardware needs to follow strict guidelines with respect to response times. Hard real-time systems have to be predictable to ensure that timing of event and response actions are always known and adhered to,

Soft real-time systems on the other hand deal with timing requirements in more of a lackadaisical manner, where events timings are non-deterministic. Thus the programming for such system is said to be more complex than its Hard real-time partner.

A more commonly used meaning of "Real-time Systems" is "the successful achievement of results with acceptable optimality and predictability of timeliness". This is the definition that I intend to use through out this project.

I intend to develop a prototype system that once it has been presented with the relative information regarding customer call details a response will be return near instantaneously, hence the "Real Time" part of the project title. 44

8 Identification of Problem and Specification

A customer would come to the software company with a set of requirements. The development house would then analyse the problem domain, propose a solution using certain formal methods and then if the customer is satisfied, they would agree to the design and the implementation would follow.

This project has taken a different direction; I initially identified a problem in an industry and proposed to find a solution to the problem. Therefore, I have also had to take on the task of the customer. This required in-depth research into the industry. In figure 7 the normal model of systems development has been shown. This method has been amended (figure 8) to accommodate this project. 45

Figure 7 Normal Linear Sequential Model (Waterfall)

From now on, this project joins with what could be considered a normal software engineering project (removing the analysis stage, as it has already been done).

Figure 8 Amended Linear Sequential Model (Waterfall)

8.1 Specification

From the research provided it can be shown that a system to detect "Call Surfing" by methods such as "Call Selling" and "PRS fraud", will help save the telecommunication industry potentially millions of pounds per annum. The proposed solution can be summarised as:

Develop a prototype system using neural networks that will analyse the call patterns of individual customers, returning a status of whether it thinks the call pattern is fraudulent or not. The results of which will ascertain if such a solution is valid.

Using the above criteria as a starting base, we can see from previous research (chapter 7) it is more complex than what is simply stated above. The development aspects of the system can be broken down into the following stages.

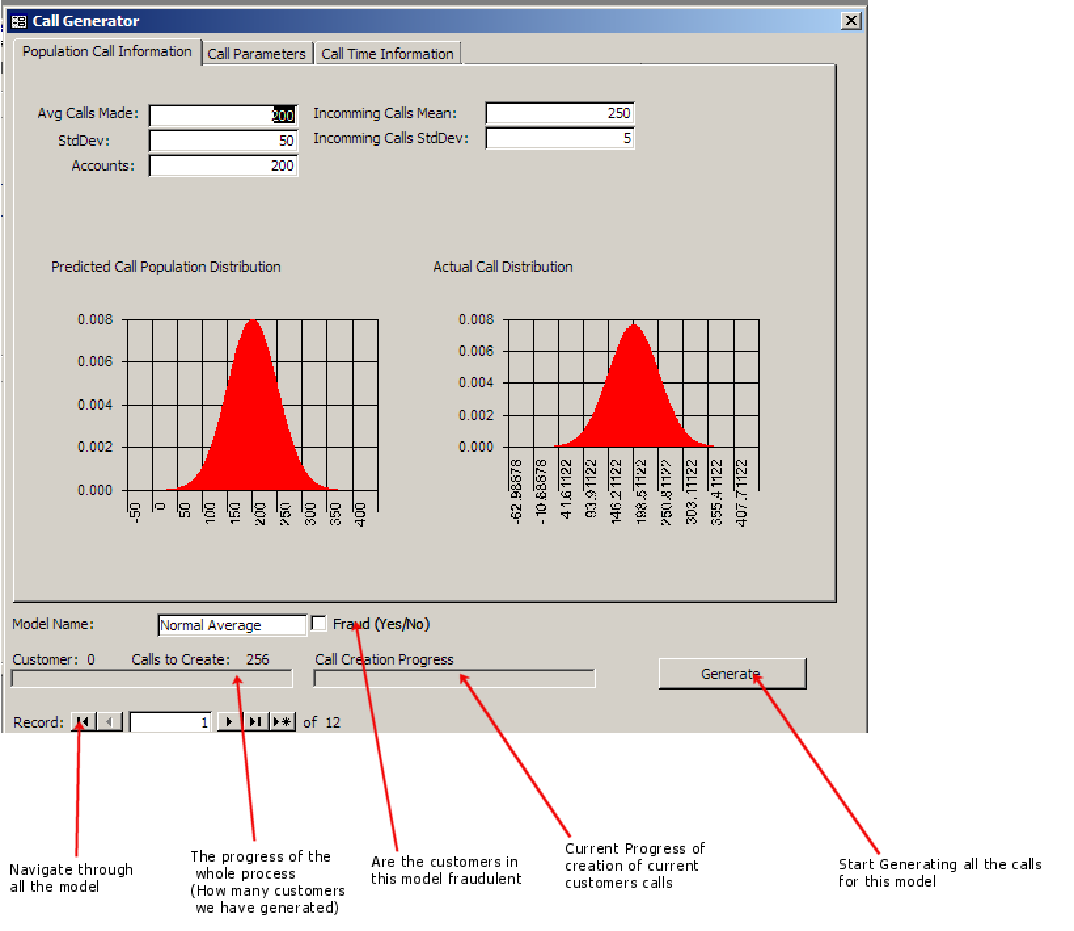

- Develop a customer call generation tool. The tool will model how classes of customers behave given user defined parameters.

- Model neural networks using the generated data mentioned above, with a training regime, testing methods and validation of results.

A customer call generation tool will need to be created as I am unable to obtain any proper call information from telecom companies. The customer call generator will be able to generate all the customers and their calls needed for this project.

8.2 System Tools Research and Requirements

The aim of this section is to understand the reasoning behind the selection of the tools used to develop a system that can detect fraud, as well as a further discussion into the requirements of the project. This is essential since we now know the minimum requirements for the solution and before we can design how the package as a whole will work, we must build up a more concrete set of requirements and we must also understand how the development environments will help and hinder development.

The requirements of the system can be broken down in to two separate stages, one for the CDR Tool and the other for the neural network. The requirements were established by myself to give limits to the project, these limits are then imposed to stop feature bloat and to minimise the risk that the project would not get completed in time. The project would have to meet these requirements to be judged successful.

The requirements were gathered after the research stage (sections 7.2 -7.5) into fraud, the telecommunications industry and fraud in the telecommunications industry. Following on from this, several theories and methods of using a neural network presented themselves as possible solutions to the problems; for various reasons where decided not to be implemented. What therefore follows is the final set of requirements deduced from a subset of all the initial theories. These theories were based of and developed in tandem with the system tools research. (Notes available on request)

Since this project is an investigation, it requires the development of a prototype tool. Prototypes as the name suggests, do not have to be a fully functioning product that it is aimed at the people who are to use it. Instead it is a proof of concept, saying that "Yes this solution is viable and will work using following the principles".

It would be ideal to have development tools that are perfect for the task in hand, however unfortunately this can never be the case, for many reasons not only including a limited range of software the university posses, but the cost of the software that I can afford.

8.2.1 Further Requirements for the CDR Tool and Development Tool Research

When considering the features for a CDR (Customer/Call Detail Record) generation tool it is important to understand all the data that is pertinent to a call. This is needed since the analysis of the data will result in the creation of the detection methods which directly affects the success of the fraud detection tool.

The CDR (Customer Detail Record) Tool must be able to create groups of customers that follow a given model. This implies that the models must be able to be specified in a form where the data can be represented in such away that it makes the model information easy to use from a human perspective, but the format of the data is flexible enough so that algorithms can be easily developed to create the customer information.

Customer attributes will be considered in the design section of this project, as further research is needed to judge which attributes are the main drivers of a customer's account information, while other attributes may be inferentially obtained from the main attributes.

Due to the huge amount of data that will be needed when creating a suitable system to model CDR's, it is safe to assume that a RDBMS (Relational Database Management System) will be needed. The main question is: What type of RDBMS should the project use?

Points such as interoperability with programming tools, data extraction facilities, and performance must all be understood.

It is widely considered that SQL is the de-facto standard for information extraction from an RDBMS, so there is little argument that a tool must be able to communicate directly with the database using this Declarative language. 46

Figure 9 Standard model for database communication

Tools for each stage (Generation of data and Fraud Detection System) must be able to communicate with the RDBMS (figure 9). It is here where a remote communication protocol called ODBC (Open Database Connectivity) developed by Microsoft 47 should be highlighted. ODBC allows any program to access RDBMS's created by many different vendors, with little or no need to alter the client application if databases were to be changed during the project. ODBC also removes the distinct of where a RDBMS is physically located, as it does not require the client application to implement any network communication protocols.

Because of the decision that ODBC will be used, we are essentially free to choose whatever RDBMS is available. The options for RDBMS are as follows, but not an exhaustive list of all the database systems available to use:

- MySql, a highly used, efficient multi-user open source RDBMS, used on many websites throughout the internet. However, several failings remove this choice of RDMBS, from the running. These include (at the time of assessing the requirements) no support for sub queries (link), limited join facilities (link) and no support for SQL views. 48

- PostgreSQL is a heavy weight multi-user open source RDBMS alternative to Oracle. With excellent performance and uptime, inclusion of its own SQL style procedural language to enable easier data manipulation, and competent ODBC drivers. Has the ability to run in a windows environment, but still requires ODBC to connect with it. 49

- Oracle, a heavy weight business class RDBMS with excellent performance and scalability. The likely feature set required for this project will not cover even ½ of the available features that Oracle offers. Oracle has had for many years its own data input language called Oracle Forms as well as it's own procedural language. While I have worked with Oracle in a professional environment, it is judged that for this project its functionality is an overkill. Combine this with the fact that the Oracle DB will always reside in the university servers and access to such services may for some uncontrollable circumstances become unavailable. 50

- MS Access 2000 is a business orientated RDBMS, although it does not support many of the higher end features of some of the other commercial databases such as efficient multi user support.

MS Access 2000 has its own implementation of VBA (Visual Basic for Applications), which supplies a far superior interface and development language than the other RDBMS mentioned through the use of Windows forms allowing for easy prototyping and application development; partly due to the ability to model, control and access the data types and the underlying data store with no extra work. 51

The ability for MS Access 2000 to have the CDR generation tool sitting directly on top of the RDMS is a tremendous advantage. As keeping everything in one location will enable me to develop the software in more than once place, rather than establishing connections to remote databases which could prove to be cumbersome, slow and prone to failure (depending on the internet connection). (Figure 10)

Figure 10 An Ideal situation for CDR Tool and Fraud Detection Tool

8.2.2 Further Requirements for the Fraud Detection Prototype and Development Tool Research

On the Fraud Detection Tool side of the requirements, we have to choose a tool that has the ability to access the data store, the ability to perform extra processing of the data and show the results of the tool's performance. Like previously mentioned, the tool will simply be a prototype, proof of concept as such and therefore will not require a user interface that would normally be the case if we where to develop a system that has been put out to tender.

The Fraud Detection Tool can be visualised as two separate, stages. Gathering the data from the RDBMS; and processing it with the Neural Network.

Figure 11 Processing the data through a neural network

Since the Fraud Detection Tool will require the use of a neural network, there are two options:

- Create a neural network from scratch with a programming language.

- Create a neural network using a tool inside of a package especially designed for prototyping and mathematical work.

It is obvious that the correct choice would be to choose a software package that can simulate a neural network. Since the development of a neural network from scratch would be a separate project in itself due to the many different types of neural networks available, I would have to understand the precise workings of each to ensure they are correct, and doing this would require time that I do not have if I am to create a fraud detection tool.

The requirements for the neural network cannot be as solidly set as those for the CDR Tool, since it is this section which is the research part of the project. To create the final neural network it is a process of making use of many different architectures, different training methods and then combining the results to get a final optimal network.

The design of the neural network and the training methods, along with an overview about neural networks is covered later in the design section of this project.

Luckily, the final neural network must meet several defined requirements:

- It must detect fraud to a reasonable level;

- A final network must be produced, that 'would' be used if the model created apply to what happens in the real world.

- Threshold level must be established to indicate which classification the data is in, i.e. any value above and including 0.75 is clear, whilst anything beneath this value is fraudulent.

After cutting a large swath through the number of potential systems I can create by removing the need to hand develop every neural network system, I can concentrate on developing the prototype by swiftly creating and testing the most suitable networks for the project and establishing which prototype system is more adept at classifying fraudulent customers.

With the ability to swiftly be able to create neural networks, it would be wise to require the system to automate the training the neural networks. Doing this will free myself from having to be involved in the process of creating each network. Once the networks have been trained the system should be able to prune the Neural Networks that cannot classify the results correctly. The nature of Neural networks means we can never guarantee 100% correct classification of the data so we will need some method of visualising the results on completion.

These requirements all point to systems that have either neural net packages included or the ability to install them as an add-on. It comes as no surprise that I am limited to the software that the university has available, these include:

- Matlab

- Visual Basic

- Visual C

There are several tools that aide the production of neural networks, however non that I have found, have the inherent ability to provide statistical functions, data processing, custom function generation and ODBC database connectivity that MATLAB provide. Although it is true that both of the Microsoft Visual programming languages are very flexible and enable rapid prototyping. They unfortunately are not pertinent to the rapid prototyping needed for this project, since many of the statistical functions and matrix operations required for neural network analysis are not provided as standard (Also the quality of neural network packages varies wildly between implementations).

MATLAB on the other hand provides all the data processing functionality required of this project with tried and test neural network packages and ODBC connectivity.

9 Design

9.1 Chapter Summary

This chapter deals with the design of both parts of the system. The CDR (Call Detail Records) Tool and the NN Fraud detection tool. The design is based on the requirements determined during the research and presented in the specification.

This chapter will not deal directly with each algorithm used in the program, it will also not show every data processing stage in detail, rather it will describe the important algorithms used to generate the data; the data it will generate based on model attributes; data that is generated as a consequence to data supplied and generated using input parameters; and an overall flow showing how the system will generate the data for each of the customer in the models.